mirror of

https://github.com/openimsdk/open-im-server.git

synced 2025-12-04 03:12:19 +08:00

Merge branch 'mongo' into feat/mongo


commit

6fddaf27e7

@ -1,79 +0,0 @@

|

||||

# Copyright © 2023 OpenIM. All rights reserved.

|

||||

#

|

||||

# Licensed under the Apache License, Version 2.0 (the "License");

|

||||

# you may not use this file except in compliance with the License.

|

||||

# You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

|

||||

#---------------Infrastructure configuration---------------------#

|

||||

etcd:

|

||||

etcdSchema: openim # the default is fine

|

||||

etcdAddr: [ 127.0.0.1:2379 ] # for a single-machine deployment, the default is fine

|

||||

userName:

|

||||

password:

|

||||

secret: openIM123

|

||||

|

||||

mysql:

|

||||

dbMysqlDatabaseName: admin_chat # database name; the default is fine

|

||||

|

||||

# Default administrator accounts

|

||||

admin:

|

||||

defaultAccount:

|

||||

account: [ "admin1", "admin2" ]

|

||||

defaultPassword: [ "password1", "password2" ]

|

||||

openIMUserID: [ "openIM123456", "openIMAdmin" ]

|

||||

faceURL: [ "", "" ]

|

||||

nickname: [ "admin1", "admin2" ]

|

||||

level: [ 1, 100 ]

|

||||

|

||||

|

||||

adminapi:

|

||||

openImAdminApiPort: [ 10009 ] # admin backend API service port; the default is fine; open this port or forward it through nginx

|

||||

listenIP: 0.0.0.0

|

||||

|

||||

chatapi:

|

||||

openImChatApiPort: [ 10008 ] # login and registration; the default is fine; open this port or forward it through nginx

|

||||

listenIP: 0.0.0.0

|

||||

|

||||

rpcport: # RPC service ports; the defaults are fine

|

||||

openImAdminPort: [ 30200 ]

|

||||

openImChatPort: [ 30300 ]

|

||||

|

||||

|

||||

rpcregistername: # RPC registered service names; the defaults are fine

|

||||

openImChatName: Chat

|

||||

openImAdminCMSName: Admin

|

||||

|

||||

chat:

|

||||

codeTTL: 300 # validity period of the SMS verification code (seconds)

|

||||

superVerificationCode: 666666 # super verification code

|

||||

alismsverify: # Alibaba Cloud SMS configuration; once your Alibaba Cloud application is approved, fill in the following four fields

|

||||

accessKeyId:

|

||||

accessKeySecret:

|

||||

signName:

|

||||

verificationCodeTemplateCode:

|

||||

|

||||

|

||||

oss:

|

||||

tempDir: enterprise-temp # directory for uploads made with temporary credentials

|

||||

dataDir: enterprise-data # final storage directory

|

||||

aliyun:

|

||||

endpoint: https://oss-cn-chengdu.aliyuncs.com

|

||||

accessKeyID: ""

|

||||

accessKeySecret: ""

|

||||

bucket: ""

|

||||

tencent:

|

||||

BucketURL: ""

|

||||

serviceURL: https://cos.COS_REGION.myqcloud.com

|

||||

secretID: ""

|

||||

secretKey: ""

|

||||

sessionToken: ""

|

||||

bucket: ""

|

||||

use: "minio"

|

||||

@ -1,27 +0,0 @@

|

||||

# Copyright © 2023 OpenIM. All rights reserved.

|

||||

#

|

||||

# Licensed under the Apache License, Version 2.0 (the "License");

|

||||

# you may not use this file except in compliance with the License.

|

||||

# You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

|

||||

#more datasource-compose.yaml

|

||||

apiVersion: 1

|

||||

|

||||

datasources:

|

||||

- name: Prometheus

|

||||

type: prometheus

|

||||

access: proxy

|

||||

orgId: 1

|

||||

url: http://127.0.0.1:9091

|

||||

basicAuth: false

|

||||

isDefault: true

|

||||

version: 1

|

||||

editable: true

|

||||

Binary file not shown.

File diff suppressed because it is too large


File diff suppressed because it is too large


@ -1,85 +0,0 @@

|

||||

# Copyright © 2023 OpenIM. All rights reserved.

|

||||

#

|

||||

# Licensed under the Apache License, Version 2.0 (the "License");

|

||||

# you may not use this file except in compliance with the License.

|

||||

# You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

|

||||

#more prometheus-compose.yml

|

||||

global:

|

||||

scrape_interval: 15s

|

||||

evaluation_interval: 15s

|

||||

external_labels:

|

||||

monitor: 'openIM-monitor'

|

||||

|

||||

scrape_configs:

|

||||

- job_name: 'prometheus'

|

||||

static_configs:

|

||||

- targets: ['localhost:9091']

|

||||

|

||||

- job_name: 'openIM-server'

|

||||

metrics_path: /metrics

|

||||

static_configs:

|

||||

- targets: ['localhost:10002']

|

||||

labels:

|

||||

group: 'api'

|

||||

|

||||

- targets: ['localhost:20110']

|

||||

labels:

|

||||

group: 'user'

|

||||

|

||||

- targets: ['localhost:20120']

|

||||

labels:

|

||||

group: 'friend'

|

||||

|

||||

- targets: ['localhost:20130']

|

||||

labels:

|

||||

group: 'message'

|

||||

|

||||

- targets: ['localhost:20140']

|

||||

labels:

|

||||

group: 'msg-gateway'

|

||||

|

||||

- targets: ['localhost:20150']

|

||||

labels:

|

||||

group: 'group'

|

||||

|

||||

- targets: ['localhost:20160']

|

||||

labels:

|

||||

group: 'auth'

|

||||

|

||||

- targets: ['localhost:20170']

|

||||

labels:

|

||||

group: 'push'

|

||||

|

||||

- targets: ['localhost:20120']

|

||||

labels:

|

||||

group: 'friend'

|

||||

|

||||

|

||||

- targets: ['localhost:20230']

|

||||

labels:

|

||||

group: 'conversation'

|

||||

|

||||

|

||||

- targets: ['localhost:21400', 'localhost:21401', 'localhost:21402', 'localhost:21403']

|

||||

labels:

|

||||

group: 'msg-transfer'

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

- job_name: 'node'

|

||||

scrape_interval: 8s

|

||||

static_configs:

|

||||

- targets: ['localhost:9100']
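The removed `prometheus-compose.yml` above lists `localhost:20120` (group `friend`) twice under the `openIM-server` job. A minimal sketch of how such repeats could be caught before a scrape config is committed, assuming the targets have already been copied into a plain Python list; the `find_duplicate_targets` helper is hypothetical and not part of OpenIM:

```python
from collections import Counter


def find_duplicate_targets(targets):
    """Return the targets that appear more than once, in first-seen order."""
    counts = Counter(targets)
    return [target for target in counts if counts[target] > 1]


# Targets copied from the 'openIM-server' job of the removed file.
openim_server_targets = [
    "localhost:10002", "localhost:20110", "localhost:20120",
    "localhost:20130", "localhost:20140", "localhost:20150",
    "localhost:20160", "localhost:20170", "localhost:20120",
    "localhost:20230", "localhost:21400", "localhost:21401",
    "localhost:21402", "localhost:21403",
]

print(find_duplicate_targets(openim_server_targets))
```

For the list above, the only repeated entry is the `friend` target on port 20120.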

|

||||

|

||||

29

.env


@ -29,8 +29,8 @@ PASSWORD=openIM123

|

||||

MINIO_ENDPOINT=http://172.28.0.1:10005

|

||||

|

||||

# Base URL for the application programming interface (API).

|

||||

# Default: API_URL=http://172.0.0.1:10002

|

||||

API_URL=http://172.0.0.1:10002

|

||||

# Default: API_URL=http://172.28.0.1:10002

|

||||

API_URL=http://172.28.0.1:10002

|

||||

|

||||

# Directory path for storing data files or related information.

|

||||

# Default: DATA_DIR=./

|

||||

@ -98,7 +98,14 @@ PROMETHEUS_NETWORK_ADDRESS=172.28.0.11

|

||||

# Address or hostname for the Grafana network.

|

||||

# Default: GRAFANA_NETWORK_ADDRESS=172.28.0.12

|

||||

GRAFANA_NETWORK_ADDRESS=172.28.0.12

|

||||

|

||||

|

||||

# Address or hostname for the node_exporter network.

|

||||

# Default: NODE_EXPORTER_NETWORK_ADDRESS=172.28.0.13

|

||||

NODE_EXPORTER_NETWORK_ADDRESS=172.28.0.13

|

||||

|

||||

# Address or hostname for the OpenIM admin network.

|

||||

# Default: OPENIM_ADMIN_FRONT_NETWORK_ADDRESS=172.28.0.14

|

||||

OPENIM_ADMIN_FRONT_NETWORK_ADDRESS=172.28.0.14

|

||||

|

||||

# ===============================================

|

||||

# = Component Extension Configuration =

|

||||

@ -283,3 +290,19 @@ SERVER_BRANCH=main

|

||||

# Port for the OpenIM admin API.

|

||||

# Default: OPENIM_ADMIN_API_PORT=10009

|

||||

OPENIM_ADMIN_API_PORT=10009

|

||||

|

||||

# Port for the node exporter.

|

||||

# Default: NODE_EXPORTER_PORT=19100

|

||||

NODE_EXPORTER_PORT=19100

|

||||

|

||||

# Port for the prometheus.

|

||||

# Default: PROMETHEUS_PORT=19090

|

||||

PROMETHEUS_PORT=19090

|

||||

|

||||

# Port for the grafana.

|

||||

# Default: GRAFANA_PORT=3000

|

||||

GRAFANA_PORT=3000

|

||||

|

||||

# Port for the admin front.

|

||||

# Default: OPENIM_ADMIN_FRONT_PORT=11002

|

||||

OPENIM_ADMIN_FRONT_PORT=11002

|

||||

|

||||

13

.github/workflows/e2e-test.yml

vendored


@ -97,4 +97,15 @@ jobs:

|

||||

|

||||

- name: Exec OpenIM System uninstall

|

||||

run: |

|

||||

sudo ./scripts/install/install.sh -u

|

||||

sudo ./scripts/install/install.sh -u

|

||||

|

||||

- name: gobenchdata publish

|

||||

uses: bobheadxi/gobenchdata@v1

|

||||

with:

|

||||

PRUNE_COUNT: 30

|

||||

GO_TEST_FLAGS: -cpu 1,2

|

||||

PUBLISH: true

|

||||

PUBLISH_BRANCH: gh-pages

|

||||

env:

|

||||

GITHUB_TOKEN: ${{ secrets.BOT_GITHUB_TOKEN }}

|

||||

continue-on-error: true

|

||||

2

.github/workflows/link-pr.yml

vendored


@ -41,7 +41,7 @@ jobs:

|

||||

# ./*.md all markdown files in the root directory

|

||||

args: --verbose -E -i --no-progress --exclude-path './CHANGELOG' './**/*.md'

|

||||

env:

|

||||

GITHUB_TOKEN: ${{secrets.GH_PAT}}

|

||||

GITHUB_TOKEN: ${{secrets.BOT_GITHUB_TOKEN}}

|

||||

|

||||

- name: Create Issue From File

|

||||

if: env.lychee_exit_code != 0

|

||||

|

||||

@ -30,7 +30,7 @@

|

||||

</p>

|

||||

|

||||

## 🟢 Scan the WeChat QR code to join the group chat

|

||||

<img src="https://openim-1253691595.cos.ap-nanjing.myqcloud.com/WechatIMG20.jpeg" width="300">

|

||||

<img src="./docs/images/Wechat.jpg" width="300">

|

||||

|

||||

|

||||

## Ⓜ️ About OpenIM

|

||||

|

||||

@ -231,7 +231,7 @@ Before you start, please make sure your changes are in demand. The best for that

|

||||

- [OpenIM Makefile Utilities](https://github.com/openimsdk/open-im-server/tree/main/docs/contrib/util-makefile.md)

|

||||

- [OpenIM Script Utilities](https://github.com/openimsdk/open-im-server/tree/main/docs/contrib/util-scripts.md)

|

||||

- [OpenIM Versioning](https://github.com/openimsdk/open-im-server/tree/main/docs/contrib/version.md)

|

||||

|

||||

- [Manage backend and monitor deployment](https://github.com/openimsdk/open-im-server/tree/main/docs/contrib/prometheus-grafana.md)

|

||||

|

||||

## :busts_in_silhouette: Community

|

||||

|

||||

|

||||

32

config/alertmanager.yml

Normal file


@ -0,0 +1,32 @@

|

||||

###################### AlertManager Configuration ######################

|

||||

# AlertManager configuration using environment variables

|

||||

#

|

||||

# Resolve timeout

|

||||

# SMTP configuration for sending alerts

|

||||

# Templates for email notifications

|

||||

# Routing configurations for alerts

|

||||

# Receiver configurations

|

||||

global:

|

||||

resolve_timeout: 5m

|

||||

smtp_from: alert@openim.io

|

||||

smtp_smarthost: smtp.163.com:465

|

||||

smtp_auth_username: alert@openim.io

|

||||

smtp_auth_password: YOURAUTHPASSWORD

|

||||

smtp_require_tls: false

|

||||

smtp_hello: xxx监控告警

|

||||

|

||||

templates:

|

||||

- /etc/alertmanager/email.tmpl

|

||||

|

||||

route:

|

||||

group_wait: 5s

|

||||

group_interval: 5s

|

||||

repeat_interval: 5m

|

||||

receiver: email

|

||||

receivers:

|

||||

- name: email

|

||||

email_configs:

|

||||

- to: {EMAIL_TO:-'alert@example.com'}

|

||||

html: '{{ template "email.to.html" . }}'

|

||||

headers: { Subject: "[OPENIM-SERVER]Alarm" }

|

||||

send_resolved: true

|

||||

@ -198,7 +198,7 @@ rpcRegisterName:

|

||||

# Whether to output in json format

|

||||

# Whether to include stack trace in logs

|

||||

log:

|

||||

storageLocation: ./logs/

|

||||

storageLocation: ../logs/

|

||||

rotationTime: 24

|

||||

remainRotationCount: 2

|

||||

remainLogLevel: 6

|

||||

@ -382,7 +382,7 @@ callback:

|

||||

# The number of Prometheus ports per service needs to correspond to rpcPort

|

||||

# The number of ports needs to be consistent with msg_transfer_service_num in script/path_info.sh

|

||||

prometheus:

|

||||

enable: true

|

||||

enable: false

|

||||

prometheusUrl: "https://openim.prometheus"

|

||||

apiPrometheusPort: [20100]

|

||||

userPrometheusPort: [ 20110 ]

|

||||

|

||||

16

config/email.tmpl

Normal file


@ -0,0 +1,16 @@

|

||||

{{ define "email.to.html" }}

|

||||

{{ range .Alerts }}

|

||||

<!-- Begin of OpenIM Alert -->

|

||||

<div style="border:1px solid #ccc; padding:10px; margin-bottom:10px;">

|

||||

<h3>OpenIM Alert</h3>

|

||||

<p><strong>Alert Program:</strong> Prometheus Alert</p>

|

||||

<p><strong>Severity Level:</strong> {{ .Labels.severity }}</p>

|

||||

<p><strong>Alert Type:</strong> {{ .Labels.alertname }}</p>

|

||||

<p><strong>Affected Host:</strong> {{ .Labels.instance }}</p>

|

||||

<p><strong>Affected Service:</strong> {{ .Labels.job }}</p>

|

||||

<p><strong>Alert Subject:</strong> {{ .Annotations.summary }}</p>

|

||||

<p><strong>Trigger Time:</strong> {{ .StartsAt.Format "2006-01-02 15:04:05" }}</p>

|

||||

</div>

|

||||

<!-- End of OpenIM Alert -->

|

||||

{{ end }}

|

||||

{{ end }}
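The `Trigger Time` line in this template relies on Go's reference-time layout, in which `2006-01-02 15:04:05` stands for year-month-day followed by 24-hour time. As a rough illustration of the resulting format, here is the equivalent `strftime` pattern in Python; the function name and the sample timestamp are made up for the example:

```python
from datetime import datetime

# The Go layout "2006-01-02 15:04:05" corresponds to this strftime pattern.
STRFTIME_EQUIVALENT = "%Y-%m-%d %H:%M:%S"


def format_trigger_time(starts_at: datetime) -> str:
    """Format a timestamp the way the template's Trigger Time line would."""
    return starts_at.strftime(STRFTIME_EQUIVALENT)


# Hypothetical alert start time, used only to show the shape of the output.
print(format_trigger_time(datetime(2023, 11, 20, 8, 5, 9)))  # 2023-11-20 08:05:09
```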

|

||||

11

config/instance-down-rules.yml

Normal file


@ -0,0 +1,11 @@

|

||||

groups:

|

||||

- name: instance_down

|

||||

rules:

|

||||

- alert: InstanceDown

|

||||

expr: up == 0

|

||||

for: 1m

|

||||

labels:

|

||||

severity: critical

|

||||

annotations:

|

||||

summary: "Instance {{ $labels.instance }} down"

|

||||

description: "{{ $labels.instance }} of job {{ $labels.job }} has been down for more than 1 minute."
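The rule above combines `expr: up == 0` with `for: 1m`, so a target has to stay down across evaluations for a full minute before the alert fires; until then it is only pending. A simplified sketch of that state progression, assuming one tracker per target and timestamps in seconds (the `InstanceDownTracker` class is illustrative and is not Prometheus code):

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class InstanceDownTracker:
    """Tracks one target against an `up == 0` rule with a hold duration."""

    for_seconds: float = 60.0              # mirrors `for: 1m`
    pending_since: Optional[float] = None  # when `up == 0` was first seen

    def evaluate(self, up: int, now: float) -> str:
        """Return 'inactive', 'pending' or 'firing' for this evaluation."""
        if up != 0:
            # The target is reachable again, so the hold timer resets.
            self.pending_since = None
            return "inactive"
        if self.pending_since is None:
            self.pending_since = now
        if now - self.pending_since >= self.for_seconds:
            return "firing"
        return "pending"


tracker = InstanceDownTracker()
for second, up in [(0, 0), (15, 0), (45, 0), (60, 0), (75, 1), (90, 0)]:
    print(second, tracker.evaluate(up, second))
```

With the 15s `evaluation_interval` used in this commit, a brief outage that recovers within the minute never leaves the pending state, which is the point of the `for` clause.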

|

||||

1460

config/prometheus-dashboard.yaml

Normal file


File diff suppressed because it is too large


85

config/prometheus.yml

Normal file


@ -0,0 +1,85 @@

|

||||

# my global config

|

||||

global:

|

||||

scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.

|

||||

evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.

|

||||

# scrape_timeout is set to the global default (10s).

|

||||

|

||||

# Alertmanager configuration

|

||||

alerting:

|

||||

alertmanagers:

|

||||

- static_configs:

|

||||

- targets: ['172.28.0.1:19093']

|

||||

|

||||

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.

|

||||

rule_files:

|

||||

- "instance-down-rules.yml"

|

||||

# - "first_rules.yml"

|

||||

# - "second_rules.yml"

|

||||

|

||||

# Scrape configurations: node-exporter plus one job per OpenIM service.

|

||||

scrape_configs:

|

||||

# The job name is added as a label "job='job_name'" to any timeseries scraped from this config.

|

||||

# Monitored information captured by prometheus

|

||||

- job_name: 'node-exporter'

|

||||

static_configs:

|

||||

- targets: [ '172.28.0.1:19100' ]

|

||||

labels:

|

||||

namespace: 'default'

|

||||

|

||||

# prometheus fetches application services

|

||||

- job_name: 'openimserver-openim-api'

|

||||

static_configs:

|

||||

- targets: [ '172.28.0.1:20100' ]

|

||||

labels:

|

||||

namespace: 'default'

|

||||

- job_name: 'openimserver-openim-msggateway'

|

||||

static_configs:

|

||||

- targets: [ '172.28.0.1:20140' ]

|

||||

labels:

|

||||

namespace: 'default'

|

||||

- job_name: 'openimserver-openim-msgtransfer'

|

||||

static_configs:

|

||||

- targets: [ 172.28.0.1:21400, 172.28.0.1:21401, 172.28.0.1:21402, 172.28.0.1:21403 ]

|

||||

labels:

|

||||

namespace: 'default'

|

||||

- job_name: 'openimserver-openim-push'

|

||||

static_configs:

|

||||

- targets: [ '172.28.0.1:20170' ]

|

||||

labels:

|

||||

namespace: 'default'

|

||||

- job_name: 'openimserver-openim-rpc-auth'

|

||||

static_configs:

|

||||

- targets: [ '172.28.0.1:20160' ]

|

||||

labels:

|

||||

namespace: 'default'

|

||||

- job_name: 'openimserver-openim-rpc-conversation'

|

||||

static_configs:

|

||||

- targets: [ '172.28.0.1:20230' ]

|

||||

labels:

|

||||

namespace: 'default'

|

||||

- job_name: 'openimserver-openim-rpc-friend'

|

||||

static_configs:

|

||||

- targets: [ '172.28.0.1:20120' ]

|

||||

labels:

|

||||

namespace: 'default'

|

||||

- job_name: 'openimserver-openim-rpc-group'

|

||||

static_configs:

|

||||

- targets: [ '172.28.0.1:20150' ]

|

||||

labels:

|

||||

namespace: 'default'

|

||||

- job_name: 'openimserver-openim-rpc-msg'

|

||||

static_configs:

|

||||

- targets: [ '172.28.0.1:20130' ]

|

||||

labels:

|

||||

namespace: 'default'

|

||||

- job_name: 'openimserver-openim-rpc-third'

|

||||

static_configs:

|

||||

- targets: [ '172.28.0.1:21301' ]

|

||||

labels:

|

||||

namespace: 'default'

|

||||

- job_name: 'openimserver-openim-rpc-user'

|

||||

static_configs:

|

||||

- targets: [ '172.28.0.1:20110' ]

|

||||

labels:

|

||||

namespace: 'default'

|

||||

32

deployments/templates/alertmanager.yml

Normal file


@ -0,0 +1,32 @@

|

||||

###################### AlertManager Configuration ######################

|

||||

# AlertManager configuration using environment variables

|

||||

#

|

||||

# Resolve timeout

|

||||

# SMTP configuration for sending alerts

|

||||

# Templates for email notifications

|

||||

# Routing configurations for alerts

|

||||

# Receiver configurations

|

||||

global:

|

||||

resolve_timeout: ${ALERTMANAGER_RESOLVE_TIMEOUT}

|

||||

smtp_from: ${ALERTMANAGER_SMTP_FROM}

|

||||

smtp_smarthost: ${ALERTMANAGER_SMTP_SMARTHOST}

|

||||

smtp_auth_username: ${ALERTMANAGER_SMTP_AUTH_USERNAME}

|

||||

smtp_auth_password: ${ALERTMANAGER_SMTP_AUTH_PASSWORD}

|

||||

smtp_require_tls: ${ALERTMANAGER_SMTP_REQUIRE_TLS}

|

||||

smtp_hello: ${ALERTMANAGER_SMTP_HELLO}

|

||||

|

||||

templates:

|

||||

- /etc/alertmanager/email.tmpl

|

||||

|

||||

route:

|

||||

group_wait: 5s

|

||||

group_interval: 5s

|

||||

repeat_interval: 5m

|

||||

receiver: email

|

||||

receivers:

|

||||

- name: email

|

||||

email_configs:

|

||||

- to: ${ALERTMANAGER_EMAIL_TO}

|

||||

html: '{{ template "email.to.html" . }}'

|

||||

headers: { Subject: "[OPENIM-SERVER]Alarm" }

|

||||

send_resolved: true
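This template differs from `config/alertmanager.yml` only in that concrete values are replaced by `${ALERTMANAGER_...}` placeholders. The sketch below shows one way such placeholders could be filled in, using Python's `string.Template`. It is not the project's own rendering script; the two-space indentation under `global:` is an assumption (the diff view does not preserve indentation), and the sample values are taken from the rendered config earlier in this commit:

```python
from string import Template

# Excerpt of the template; indentation under `global:` is assumed.
ALERTMANAGER_TEMPLATE = """\
global:
  resolve_timeout: ${ALERTMANAGER_RESOLVE_TIMEOUT}
  smtp_from: ${ALERTMANAGER_SMTP_FROM}
  smtp_smarthost: ${ALERTMANAGER_SMTP_SMARTHOST}
"""


def render(template_text, variables):
    """Fill ${NAME} placeholders; raises KeyError if a value is missing."""
    return Template(template_text).substitute(variables)


example_values = {
    "ALERTMANAGER_RESOLVE_TIMEOUT": "5m",
    "ALERTMANAGER_SMTP_FROM": "alert@openim.io",
    "ALERTMANAGER_SMTP_SMARTHOST": "smtp.163.com:465",
}

print(render(ALERTMANAGER_TEMPLATE, example_values))
```

`substitute` is used rather than `safe_substitute` so that a forgotten variable fails loudly instead of leaving a literal `${...}` in the generated file. Note that `string.Template` treats every `$name` as a placeholder, so text containing a literal `$` would need `$$` escaping.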

|

||||

@ -94,12 +94,22 @@ OPENIM_CHAT_NETWORK_ADDRESS=${OPENIM_CHAT_NETWORK_ADDRESS}

|

||||

# Address or hostname for the Prometheus network.

|

||||

# Default: PROMETHEUS_NETWORK_ADDRESS=172.28.0.11

|

||||

PROMETHEUS_NETWORK_ADDRESS=${PROMETHEUS_NETWORK_ADDRESS}

|

||||

|

||||

|

||||

# Address or hostname for the Grafana network.

|

||||

# Default: GRAFANA_NETWORK_ADDRESS=172.28.0.12

|

||||

GRAFANA_NETWORK_ADDRESS=${GRAFANA_NETWORK_ADDRESS}

|

||||

|

||||

|

||||

|

||||

# Address or hostname for the node_exporter network.

|

||||

# Default: NODE_EXPORTER_NETWORK_ADDRESS=172.28.0.13

|

||||

NODE_EXPORTER_NETWORK_ADDRESS=${NODE_EXPORTER_NETWORK_ADDRESS}

|

||||

|

||||

# Address or hostname for the OpenIM admin network.

|

||||

# Default: OPENIM_ADMIN_FRONT_NETWORK_ADDRESS=172.28.0.14

|

||||

OPENIM_ADMIN_FRONT_NETWORK_ADDRESS=${OPENIM_ADMIN_FRONT_NETWORK_ADDRESS}

|

||||

|

||||

# Address or hostname for the alertmanager network.

|

||||

# Default: ALERT_MANAGER_NETWORK_ADDRESS=172.28.0.15

|

||||

ALERT_MANAGER_NETWORK_ADDRESS=${ALERT_MANAGER_NETWORK_ADDRESS}

|

||||

# ===============================================

|

||||

# = Component Extension Configuration =

|

||||

# ===============================================

|

||||

@ -215,7 +225,7 @@ PROMETHEUS_PORT=${PROMETHEUS_PORT}

|

||||

GRAFANA_ADDRESS=${GRAFANA_NETWORK_ADDRESS}

|

||||

|

||||

# Port on which Grafana service is running.

|

||||

# Default: GRAFANA_PORT=3000

|

||||

# Default: GRAFANA_PORT=13000

|

||||

GRAFANA_PORT=${GRAFANA_PORT}

|

||||

|

||||

# ======================================

|

||||

@ -283,3 +293,23 @@ SERVER_BRANCH=${SERVER_BRANCH}

|

||||

# Port for the OpenIM admin API.

|

||||

# Default: OPENIM_ADMIN_API_PORT=10009

|

||||

OPENIM_ADMIN_API_PORT=${OPENIM_ADMIN_API_PORT}

|

||||

|

||||

# Port for the node exporter.

|

||||

# Default: NODE_EXPORTER_PORT=19100

|

||||

NODE_EXPORTER_PORT=${NODE_EXPORTER_PORT}

|

||||

|

||||

# Port for the prometheus.

|

||||

# Default: PROMETHEUS_PORT=19090

|

||||

PROMETHEUS_PORT=${PROMETHEUS_PORT}

|

||||

|

||||

# Port for the grafana.

|

||||

# Default: GRAFANA_PORT=13000

|

||||

GRAFANA_PORT=${GRAFANA_PORT}

|

||||

|

||||

# Port for the admin front.

|

||||

# Default: OPENIM_ADMIN_FRONT_PORT=11002

|

||||

OPENIM_ADMIN_FRONT_PORT=${OPENIM_ADMIN_FRONT_PORT}

|

||||

|

||||

# Port for the alertmanager.

|

||||

# Default: ALERT_MANAGER_PORT=19093

|

||||

ALERT_MANAGER_PORT=${ALERT_MANAGER_PORT}

|

||||

85

deployments/templates/prometheus.yml

Normal file


@ -0,0 +1,85 @@

|

||||

# my global config

|

||||

global:

|

||||

scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.

|

||||

evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.

|

||||

# scrape_timeout is set to the global default (10s).

|

||||

|

||||

# Alertmanager configuration

|

||||

alerting:

|

||||

alertmanagers:

|

||||

- static_configs:

|

||||

- targets: ['${ALERT_MANAGER_ADDRESS}:${ALERT_MANAGER_PORT}']

|

||||

|

||||

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.

|

||||

rule_files:

|

||||

- "instance-down-rules.yml"

|

||||

# - "first_rules.yml"

|

||||

# - "second_rules.yml"

|

||||

|

||||

# Scrape configurations: node-exporter plus one job per OpenIM service.

|

||||

scrape_configs:

|

||||

# The job name is added as a label "job='job_name'" to any timeseries scraped from this config.

|

||||

# Monitored information captured by prometheus

|

||||

- job_name: 'node-exporter'

|

||||

static_configs:

|

||||

- targets: [ '${NODE_EXPORTER_ADDRESS}:${NODE_EXPORTER_PORT}' ]

|

||||

labels:

|

||||

namespace: 'default'

|

||||

|

||||

# prometheus fetches application services

|

||||

- job_name: 'openimserver-openim-api'

|

||||

static_configs:

|

||||

- targets: [ '${OPENIM_SERVER_ADDRESS}:${API_PROM_PORT}' ]

|

||||

labels:

|

||||

namespace: 'default'

|

||||

- job_name: 'openimserver-openim-msggateway'

|

||||

static_configs:

|

||||

- targets: [ '${OPENIM_SERVER_ADDRESS}:${MSG_GATEWAY_PROM_PORT}' ]

|

||||

labels:

|

||||

namespace: 'default'

|

||||

- job_name: 'openimserver-openim-msgtransfer'

|

||||

static_configs:

|

||||

- targets: [ ${MSG_TRANSFER_PROM_ADDRESS_PORT} ]

|

||||

labels:

|

||||

namespace: 'default'

|

||||

- job_name: 'openimserver-openim-push'

|

||||

static_configs:

|

||||

- targets: [ '${OPENIM_SERVER_ADDRESS}:${PUSH_PROM_PORT}' ]

|

||||

labels:

|

||||

namespace: 'default'

|

||||

- job_name: 'openimserver-openim-rpc-auth'

|

||||

static_configs:

|

||||

- targets: [ '${OPENIM_SERVER_ADDRESS}:${AUTH_PROM_PORT}' ]

|

||||

labels:

|

||||

namespace: 'default'

|

||||

- job_name: 'openimserver-openim-rpc-conversation'

|

||||

static_configs:

|

||||

- targets: [ '${OPENIM_SERVER_ADDRESS}:${CONVERSATION_PROM_PORT}' ]

|

||||

labels:

|

||||

namespace: 'default'

|

||||

- job_name: 'openimserver-openim-rpc-friend'

|

||||

static_configs:

|

||||

- targets: [ '${OPENIM_SERVER_ADDRESS}:${FRIEND_PROM_PORT}' ]

|

||||

labels:

|

||||

namespace: 'default'

|

||||

- job_name: 'openimserver-openim-rpc-group'

|

||||

static_configs:

|

||||

- targets: [ '${OPENIM_SERVER_ADDRESS}:${GROUP_PROM_PORT}' ]

|

||||

labels:

|

||||

namespace: 'default'

|

||||

- job_name: 'openimserver-openim-rpc-msg'

|

||||

static_configs:

|

||||

- targets: [ '${OPENIM_SERVER_ADDRESS}:${MESSAGE_PROM_PORT}' ]

|

||||

labels:

|

||||

namespace: 'default'

|

||||

- job_name: 'openimserver-openim-rpc-third'

|

||||

static_configs:

|

||||

- targets: [ '${OPENIM_SERVER_ADDRESS}:${THIRD_PROM_PORT}' ]

|

||||

labels:

|

||||

namespace: 'default'

|

||||

- job_name: 'openimserver-openim-rpc-user'

|

||||

static_configs:

|

||||

- targets: [ '${OPENIM_SERVER_ADDRESS}:${USER_PROM_PORT}' ]

|

||||

labels:

|

||||

namespace: 'default'
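The `msgtransfer` job is the one place where this template takes a whole target list from a single variable, `${MSG_TRANSFER_PROM_ADDRESS_PORT}`; in the rendered `config/prometheus.yml` that position holds four `address:port` pairs. A sketch of how such a value could be assembled, assuming one shared address and a list of ports (the `join_targets` helper is illustrative and is not the project's script):

```python
def join_targets(address, ports):
    """Build a comma-separated 'address:port' list for a YAML flow sequence."""
    return ", ".join(f"{address}:{port}" for port in ports)


# Address and ports as they appear in the rendered config/prometheus.yml.
msg_transfer_targets = join_targets("172.28.0.1", [21400, 21401, 21402, 21403])
print(f"- targets: [ {msg_transfer_targets} ]")
```

The number of ports has to match the number of msg-transfer instances, as the comment in the main config about `msg_transfer_service_num` points out.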

|

||||

@ -67,17 +67,17 @@ services:

|

||||

ipv4_address: ${REDIS_NETWORK_ADDRESS}

|

||||

|

||||

zookeeper:

|

||||

image: bitnami/zookeeper:3.8

|

||||

container_name: zookeeper

|

||||

ports:

|

||||

- "${ZOOKEEPER_PORT}:2181"

|

||||

volumes:

|

||||

- "/etc/localtime:/etc/localtime"

|

||||

environment:

|

||||

- ALLOW_ANONYMOUS_LOGIN=yes

|

||||

- TZ="Asia/Shanghai"

|

||||

restart: always

|

||||

networks:

|

||||

image: bitnami/zookeeper:3.8

|

||||

container_name: zookeeper

|

||||

ports:

|

||||

- "${ZOOKEEPER_PORT}:2181"

|

||||

volumes:

|

||||

- "/etc/localtime:/etc/localtime"

|

||||

environment:

|

||||

- ALLOW_ANONYMOUS_LOGIN=yes

|

||||

- TZ="Asia/Shanghai"

|

||||

restart: always

|

||||

networks:

|

||||

server:

|

||||

ipv4_address: ${ZOOKEEPER_NETWORK_ADDRESS}

|

||||

|

||||

@ -100,6 +100,7 @@ services:

|

||||

- KAFKA_CFG_NODE_ID=0

|

||||

- KAFKA_CFG_PROCESS_ROLES=controller,broker

|

||||

- KAFKA_CFG_CONTROLLER_QUORUM_VOTERS=0@<your_host>:9093

|

||||

- KAFKA_HEAP_OPTS=-Xmx256m -Xms256m

|

||||

- KAFKA_CFG_LISTENERS=PLAINTEXT://:9092,CONTROLLER://:9093,EXTERNAL://:9094

|

||||

- KAFKA_CFG_ADVERTISED_LISTENERS=PLAINTEXT://kafka:9092,EXTERNAL://${DOCKER_BRIDGE_GATEWAY}:${KAFKA_PORT}

|

||||

- KAFKA_CFG_LISTENER_SECURITY_PROTOCOL_MAP=CONTROLLER:PLAINTEXT,EXTERNAL:PLAINTEXT,PLAINTEXT:PLAINTEXT

|

||||

@ -142,3 +143,68 @@ services:

|

||||

server:

|

||||

ipv4_address: ${OPENIM_WEB_NETWORK_ADDRESS}

|

||||

|

||||

openim-admin:

|

||||

image: ${IMAGE_REGISTRY}/openim-admin-front:v3.4.0

|

||||

# image: ghcr.io/openimsdk/openim-admin-front:v3.4.0

|

||||

# image: registry.cn-hangzhou.aliyuncs.com/openimsdk/openim-admin-front:v3.4.0

|

||||

# image: openim/openim-admin-front:v3.4.0

|

||||

container_name: openim-admin

|

||||

restart: always

|

||||

ports:

|

||||

- "${OPENIM_ADMIN_FRONT_PORT}:80"

|

||||

networks:

|

||||

server:

|

||||

ipv4_address: ${OPENIM_ADMIN_FRONT_NETWORK_ADDRESS}

|

||||

|

||||

prometheus:

|

||||

image: prom/prometheus

|

||||

container_name: prometheus

|

||||

hostname: prometheus

|

||||

restart: always

|

||||

volumes:

|

||||

- ./config/prometheus.yml:/etc/prometheus/prometheus.yml

|

||||

- ./config/instance-down-rules.yml:/etc/prometheus/instance-down-rules.yml

|

||||

ports:

|

||||

- "${PROMETHEUS_PORT}:9090"

|

||||

networks:

|

||||

server:

|

||||

ipv4_address: ${PROMETHEUS_NETWORK_ADDRESS}

|

||||

|

||||

alertmanager:

|

||||

image: prom/alertmanager

|

||||

container_name: alertmanager

|

||||

hostname: alertmanager

|

||||

restart: always

|

||||

volumes:

|

||||

- ./config/alertmanager.yml:/etc/alertmanager/alertmanager.yml

|

||||

- ./config/email.tmpl:/etc/alertmanager/email.tmpl

|

||||

ports:

|

||||

- "${ALERT_MANAGER_PORT}:9093"

|

||||

networks:

|

||||

server:

|

||||

ipv4_address: ${ALERT_MANAGER_NETWORK_ADDRESS}

|

||||

|

||||

grafana:

|

||||

image: grafana/grafana

|

||||

container_name: grafana

|

||||

hostname: grafana

|

||||

user: root

|

||||

restart: always

|

||||

ports:

|

||||

- "${GRAFANA_PORT}:3000"

|

||||

volumes:

|

||||

- ${DATA_DIR}/components/grafana:/var/lib/grafana

|

||||

networks:

|

||||

server:

|

||||

ipv4_address: ${GRAFANA_NETWORK_ADDRESS}

|

||||

|

||||

node-exporter:

|

||||

image: quay.io/prometheus/node-exporter

|

||||

container_name: node-exporter

|

||||

hostname: node-exporter

|

||||

restart: always

|

||||

ports:

|

||||

- "${NODE_EXPORTER_PORT}:9100"

|

||||

networks:

|

||||

server:

|

||||

ipv4_address: ${NODE_EXPORTER_NETWORK_ADDRESS}

|

||||

|

||||

@ -150,7 +150,7 @@ For convenience, configuration through modifying environment variables is recomm

|

||||

+ **Description**: API address.

|

||||

+ **Note**: If the server has an external IP, it will be automatically obtained. For internal networks, set this variable to the IP serving internally.

|

||||

|

||||

```

|

||||

```bash

|

||||

export API_URL="http://ip:10002"

|

||||

```

|

||||

|

||||

@ -412,7 +412,7 @@ Configuration for Grafana, including its port and address.

|

||||

|

||||

| Parameter | Example Value | Description |

|

||||

| --------------- | -------------------------- | --------------------- |

|

||||

| GRAFANA_PORT | "3000" | Port used by Grafana. |

|

||||

| GRAFANA_PORT | "13000" | Port used by Grafana. |

|

||||

| GRAFANA_ADDRESS | "${DOCKER_BRIDGE_GATEWAY}" | Address for Grafana. |

|

||||

|

||||

### 2.16. <a name='RPCPortConfigurationVariables'></a>RPC Port Configuration Variables

|

||||

|

||||

323

docs/contrib/prometheus-grafana.md

Normal file


@ -0,0 +1,323 @@

|

||||

# Deployment and Design of OpenIM's Management Backend and Monitoring

|

||||

|

||||

<!-- vscode-markdown-toc -->
* 1. [Source Code & Docker](#SourceCodeDocker)
    * 1.1. [Deployment](#Deployment)
    * 1.2. [Configuration](#Configuration)
    * 1.3. [Monitoring Running in Docker Guide](#MonitoringRunninginDockerGuide)
        * 1.3.1. [Introduction](#Introduction)
        * 1.3.2. [Prerequisites](#Prerequisites)
        * 1.3.3. [Step 1: Clone the Repository](#Step1:ClonetheRepository)
        * 1.3.4. [Step 2: Start Docker Compose](#Step2:StartDockerCompose)
        * 1.3.5. [Step 3: Use the OpenIM Web Interface](#Step3:UsetheOpenIMWebInterface)
        * 1.3.6. [Running Effect](#RunningEffect)
        * 1.3.7. [Step 4: Access the Admin Panel](#Step4:AccesstheAdminPanel)
        * 1.3.8. [Step 5: Access the Monitoring Interface](#Step5:AccesstheMonitoringInterface)
        * 1.3.9. [Next Steps](#NextSteps)
        * 1.3.10. [Troubleshooting](#Troubleshooting)
* 2. [Kubernetes](#Kubernetes)
    * 2.1. [Middleware Monitoring](#MiddlewareMonitoring)
    * 2.2. [Custom OpenIM Metrics](#CustomOpenIMMetrics)
    * 2.3. [Node Exporter](#NodeExporter)
* 3. [Setting Up and Configuring AlertManager Using Environment Variables and `make init`](#SettingUpandConfiguringAlertManagerUsingEnvironmentVariablesandmakeinit)
    * 3.1. [Introduction](#Introduction-1)
    * 3.2. [Prerequisites](#Prerequisites-1)
    * 3.3. [Configuration Steps](#ConfigurationSteps)
        * 3.3.1. [Exporting Environment Variables](#ExportingEnvironmentVariables)
        * 3.3.2. [Initializing AlertManager](#InitializingAlertManager)
        * 3.3.3. [Key Configuration Fields](#KeyConfigurationFields)
        * 3.3.4. [Configuring SMTP Authentication Password](#ConfiguringSMTPAuthenticationPassword)
        * 3.3.5. [Useful Links for Common Email Servers](#UsefulLinksforCommonEmailServers)
    * 3.4. [Conclusion](#Conclusion)

<!-- vscode-markdown-toc-config
    numbering=true
    autoSave=true
    /vscode-markdown-toc-config -->
<!-- /vscode-markdown-toc -->

OpenIM supports several deployment options to suit different environments and requirements. They are summarized below:

1. Source Code Deployment:
    + **Regular Source Code Deployment**: Deployment using the `nohup` method. This basic method is suitable for development and testing environments. For details, refer to the [Regular Source Code Deployment Guide](https://docs.openim.io/).
    + **Production-Level Deployment**: Deployment using the `system` method, which is better suited to production environments because it offers higher stability and reliability. For details, refer to the [Production-Level Deployment Guide](https://docs.openim.io/guides/gettingStarted/install-openim-linux-system).
2. Cluster Deployment:
    + **Kubernetes Deployment**: Two methods are available: Helm and sealos. This option suits environments that require high availability and scalability. For details, refer to the [Kubernetes Deployment Guide](https://docs.openim.io/guides/gettingStarted/k8s-deployment).
3. Docker Deployment:
    + **Regular Docker Deployment**: Suitable for quick deployments and small projects. For details, refer to the [Docker Deployment Guide](https://docs.openim.io/guides/gettingStarted/dockerCompose).
    + **Docker Compose Deployment**: Provides more convenient service management and configuration, suitable for complex multi-container applications.

The sections below cover the specific steps, monitoring, and management backend configuration for these deployment methods, along with usage tips to help you choose the option that best fits your needs.

## 1. <a name='SourceCodeDocker'></a>Source Code & Docker

|

||||

|

||||

### 1.1. <a name='Deployment'></a>Deployment

|

||||

|

||||

OpenIM deploys openim-server and openim-chat from source code, while other components are deployed via Docker.

|

||||

|

||||

For Docker deployment, you can deploy all components with a single command using the [openimsdk/openim-docker](https://github.com/openimsdk/openim-docker) repository. The deployment configuration lives in the [environment.sh](https://github.com/openimsdk/open-im-server/blob/main/scripts/install/environment.sh) script, which is the place to learn about the available environment variables.

|

||||

|

||||

Prometheus is not enabled by default. To enable it, set the following environment variable before executing `make init`:

|

||||

|

||||

```bash

|

||||

export PROMETHEUS_ENABLE=true # Default is false

|

||||

```

|

||||

|

||||

Then, execute:

|

||||

|

||||

```bash

|

||||

make init

|

||||

docker compose up -d

|

||||

```

|

||||

|

||||

### 1.2. <a name='Configuration'></a>Configuration

|

||||

|

||||

To configure Prometheus data sources in Grafana, follow these steps:

|

||||

|

||||

1. **Open Grafana**: In your web browser, go to the Grafana URL. If you haven't changed the port, the address is typically [http://localhost:13000](http://localhost:13000/).

|

||||

|

||||

2. **Log in with default credentials**: Grafana's default username and password are both `admin`. You will be prompted to change the password on your first login.

|

||||

|

||||

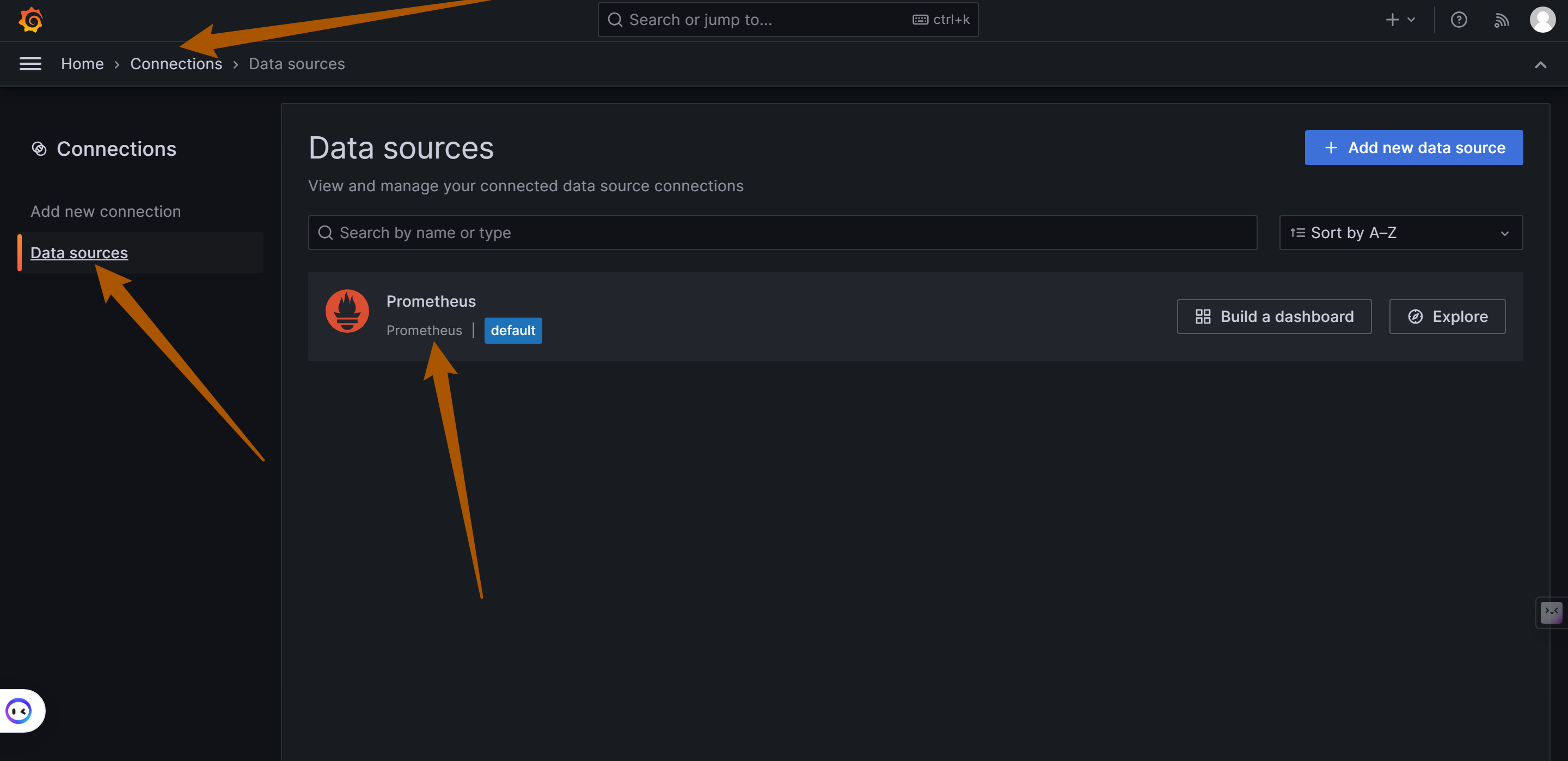

3. **Access Data Sources Settings**:

|

||||

|

||||

+ In the left menu of Grafana, look for and click the "gear" icon representing "Configuration."

|

||||

+ In the configuration menu, select "Data Sources."

|

||||

|

||||

4. **Add a New Data Source**:

|

||||

|

||||

+ On the Data Sources page, click the "Add data source" button.

|

||||

+ In the list, find and select "Prometheus."

|

||||

|

||||

|

||||

|

||||

Click `Add New connection` to add more data sources, such as Loki (responsible for log storage and query processing).

|

||||

|

||||

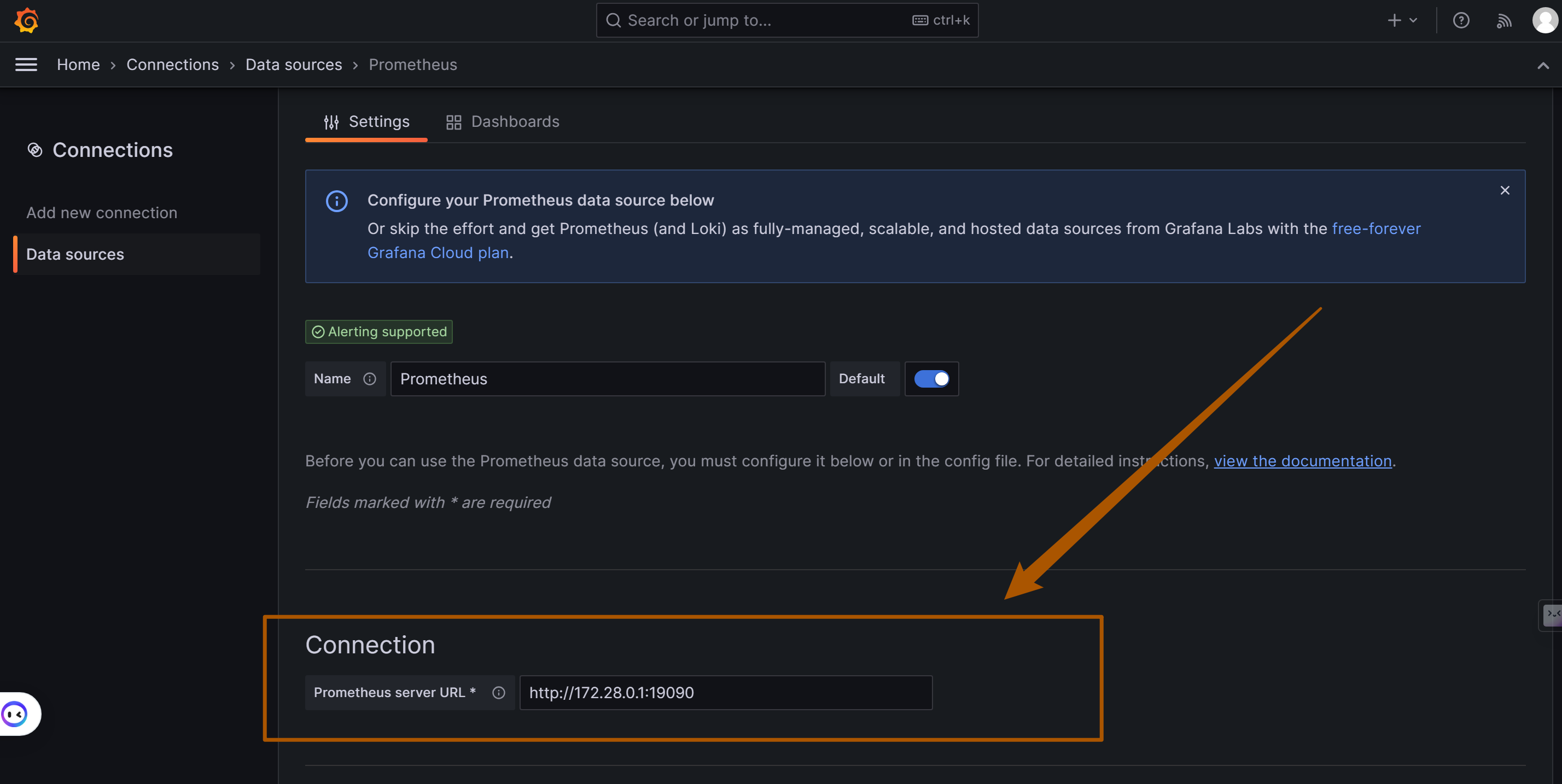

5. **Configure the Prometheus Data Source**:

|

||||

|

||||

+ On the configuration page, fill in the details of the Prometheus server, most importantly the URL of the Prometheus service. For example, if Prometheus is running on the same machine as OpenIM, the URL might be `http://172.28.0.1:19090`, where the host matches the address in the `DOCKER_BRIDGE_GATEWAY` variable, because OpenIM and the other components are linked via that gateway. `19090` is the default Prometheus port in the OpenIM deployment.

|

||||

+ Adjust other settings as needed, such as authentication and TLS settings.

|

||||

|

||||

|

||||

|

||||

6. **Save and Test**:

|

||||

|

||||

+ After completing the configuration, click the "Save & Test" button to ensure that Grafana can successfully connect to Prometheus.
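
As a rough sketch of step 5, the data source URL can be assembled from the same values the deployment uses. `DOCKER_BRIDGE_GATEWAY` comes from the text above; `PROMETHEUS_PORT` and the helper name `prometheus_url` are assumptions made for illustration, so check `environment.sh` for the variable names your version actually defines.

```bash
# Build the Prometheus URL to paste into Grafana's data source form.
# Falls back to the defaults mentioned in this guide when the
# variables are unset. PROMETHEUS_PORT is an assumed variable name.
prometheus_url() {
  local host="${DOCKER_BRIDGE_GATEWAY:-172.28.0.1}"
  local port="${PROMETHEUS_PORT:-19090}"
  printf 'http://%s:%s\n' "$host" "$port"
}
```

With neither variable set, `prometheus_url` prints `http://172.28.0.1:19090`.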

|

||||

|

||||

**Importing Dashboards in Grafana**

|

||||

|

||||

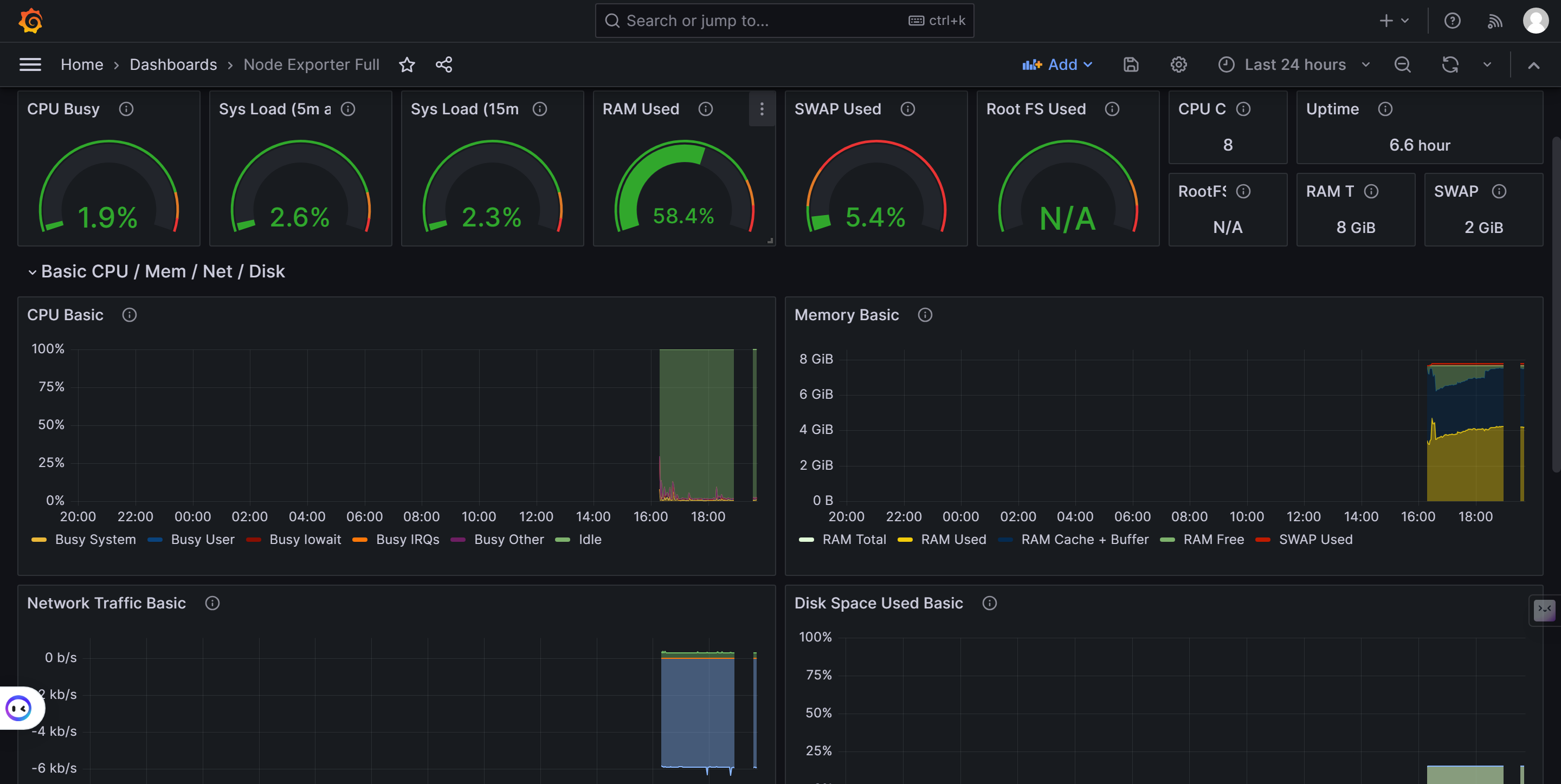

Dashboards can be imported for both the OpenIM Server application services and Node Exporter. The key metrics and the import steps are described below:

|

||||

|

||||

**Key Metrics Overview and Deployment Steps**

|

||||

|

||||

To monitor OpenIM in Grafana, you need to focus on three categories of key metrics, each with its specific deployment and configuration steps:

|

||||

|

||||

1. **OpenIM Metrics (`prometheus-dashboard.yaml`)**:

|

||||

+ **Configuration File Path**: Located at `config/prometheus-dashboard.yaml`.

|

||||

+ **Enabling Monitoring**: Set the environment variable `export PROMETHEUS_ENABLE=true` to enable Prometheus monitoring.

|

||||

+ **More Information**: Refer to the [OpenIM Configuration Guide](https://docs.openim.io/configurations/prometheus-integration).

|

||||

2. **Node Exporter**:

|

||||

+ **Container Deployment**: Deploy the `quay.io/prometheus/node-exporter` container for node monitoring.

|

||||

+ **Get Dashboard**: Access the [Node Exporter Full Feature Dashboard](https://grafana.com/grafana/dashboards/1860-node-exporter-full/) and import it either by downloading its JSON file or by entering its dashboard ID (`1860`).

|

||||

+ **Deployment Guide**: Refer to the [Node Exporter Deployment Documentation](https://prometheus.io/docs/guides/node-exporter/).

|

||||

3. **Middleware Metrics**: Each middleware requires specific steps and configurations to enable monitoring. Here is a list of common middleware and links to their respective setup guides:

|

||||

+ MySQL:

|

||||

+ **Configuration**: Ensure MySQL has performance monitoring enabled.

|

||||

+ **Link**: Refer to the [MySQL Monitoring Configuration Guide](https://grafana.com/docs/grafana/latest/datasources/mysql/).

|

||||

+ Redis:

|

||||

+ **Configuration**: Configure Redis to allow monitoring data export.

|

||||

+ **Link**: Refer to the [Redis Monitoring Guide](https://grafana.com/docs/grafana/latest/datasources/redis/).

|

||||

+ MongoDB:

|

||||

+ **Configuration**: Set up monitoring metrics for MongoDB.

|

||||

+ **Link**: Refer to the [MongoDB Monitoring Guide](https://grafana.com/grafana/plugins/grafana-mongodb-datasource/).

|

||||

+ Kafka:

|

||||

+ **Configuration**: Integrate Kafka with Prometheus monitoring.

|

||||

+ **Link**: Refer to the [Kafka Monitoring Guide](https://grafana.com/grafana/plugins/grafana-kafka-datasource/).

|

||||

+ Zookeeper:

|

||||

+ **Configuration**: Ensure Zookeeper can be monitored by Prometheus.

|

||||

+ **Link**: Refer to the [Zookeeper Monitoring Configuration](https://grafana.com/docs/grafana/latest/datasources/zookeeper/).

|

||||

|

||||

|

||||

|

||||

**Importing Steps**:

|

||||

|

||||

1. **Access the Dashboard Import Interface**:

|

||||

|

||||

+ Click the `+` icon on the left menu or in the top right corner of Grafana, then select "Create."

|

||||

+ Choose "Import" to access the dashboard import interface.

|

||||

|

||||

2. **Perform Dashboard Import**:

|

||||

+ **Upload via File**: Directly upload your YAML file.

|

||||

+ **Paste Content**: Open the YAML file, copy its content, and paste it into the import interface.

|

||||

+ **Import via Grafana.com Dashboard**: Visit [Grafana Dashboards](https://grafana.com/grafana/dashboards/), search for the desired dashboard, and import it using its ID.

|

||||

3. **Configure the Dashboard**:

|

||||

+ Select the appropriate data source, such as the previously configured Prometheus.

|

||||

+ Adjust other settings, such as the dashboard name or folder.

|

||||

4. **Save and View the Dashboard**:

|

||||

+ After configuring, click "Import" to complete the process.

|

||||

+ Immediately view the new dashboard after successful import.

|

||||

|

||||

**Graph Examples:**

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

### 1.3. <a name='MonitoringRunninginDockerGuide'></a>Monitoring Running in Docker Guide

|

||||

|

||||

#### 1.3.1. <a name='Introduction'></a>Introduction

|

||||

|

||||

This guide provides the steps to run OpenIM using Docker. OpenIM is an open-source instant messaging solution that can be quickly deployed using Docker. For more information, please refer to the [OpenIM Docker GitHub](https://github.com/openimsdk/openim-docker).

|

||||

|

||||

#### 1.3.2. <a name='Prerequisites'></a>Prerequisites

|

||||

|

||||

+ Ensure that Docker and Docker Compose are installed.

|

||||

+ Basic understanding of Docker and containerization technology.

|

||||

|

||||

#### 1.3.3. <a name='Step1:ClonetheRepository'></a>Step 1: Clone the Repository

|

||||

|

||||

First, clone the OpenIM Docker repository:

|

||||

|

||||

```bash

|

||||

git clone https://github.com/openimsdk/openim-docker.git

|

||||

```

|

||||

|

||||

Navigate to the repository directory and check the `README` file for more information and configuration options.

|

||||

|

||||

#### 1.3.4. <a name='Step2:StartDockerCompose'></a>Step 2: Start Docker Compose

|

||||

|

||||

In the repository directory, run the following command to start the service:

|

||||

|

||||

```bash

|

||||

docker-compose up -d

|

||||

```

|

||||

|

||||

This will download the required Docker images and start the OpenIM service.
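
Containers can take a while to become ready after `docker-compose up -d` returns. The following is a minimal sketch of a readiness check, assuming `curl` is installed; the helper name `wait_for_http` and its retry defaults are illustrative and not part of OpenIM.

```bash
# Poll a URL until it answers with a successful HTTP status.
# Usage: wait_for_http URL [ATTEMPTS] [DELAY_SECONDS]
wait_for_http() {
  local url="$1" attempts="${2:-30}" delay="${3:-2}"
  local i=1
  while [ "$i" -le "$attempts" ]; do
    # -f makes curl return non-zero on HTTP errors (4xx/5xx).
    if curl -fsS -o /dev/null --max-time 5 "$url"; then
      return 0
    fi
    # Sleep only if another attempt remains.
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}
```

For example, `wait_for_http http://localhost:11001/` waits for the OpenIM Web interface used in the next step, and returns non-zero if it never responds.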

|

||||

|

||||

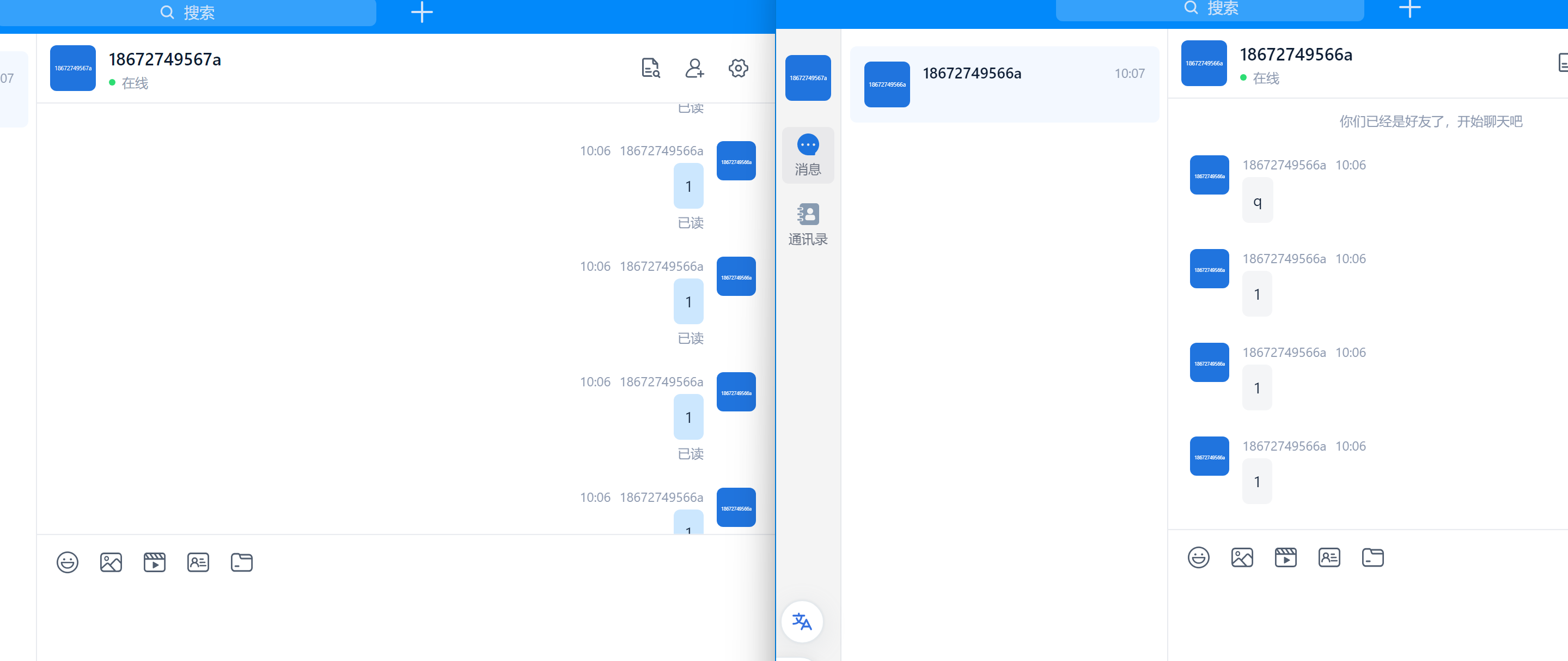

#### 1.3.5. <a name='Step3:UsetheOpenIMWebInterface'></a>Step 3: Use the OpenIM Web Interface

|

||||

|

||||

+ Open a browser in private mode and access [OpenIM Web](http://localhost:11001/).

|

||||

+ Register two users and try adding friends.

|

||||

+ Test sending messages and pictures.

|

||||

|

||||

#### 1.3.6. <a name='RunningEffect'></a>Running Effect

|

||||

|

||||

|

||||

|

||||

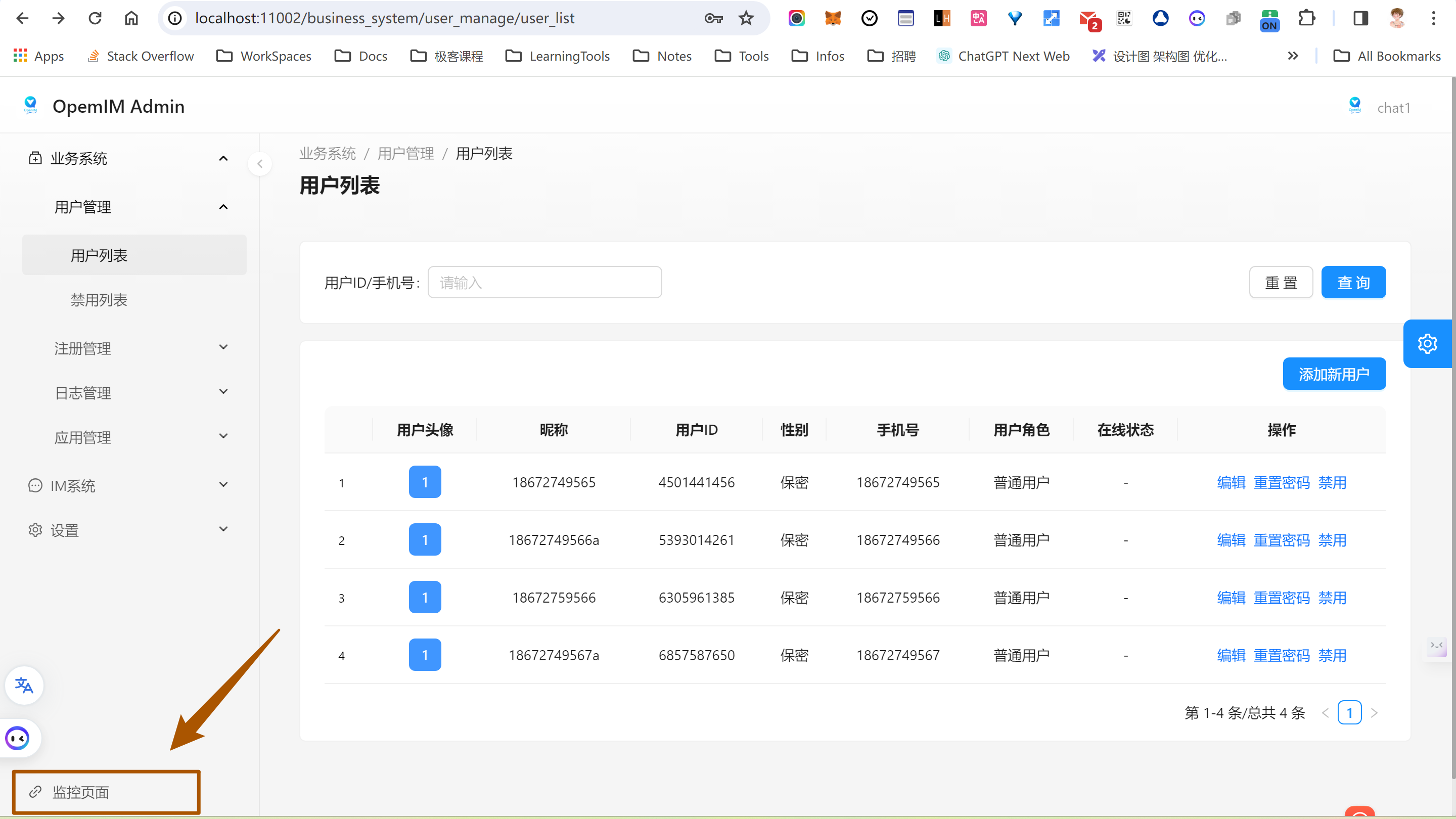

#### 1.3.7. <a name='Step4:AccesstheAdminPanel'></a>Step 4: Access the Admin Panel

|

||||

|

||||

+ Access the [OpenIM Admin Panel](http://localhost:11002/).

|

||||

+ Log in using the default username and password (`admin1:admin1`).

|

||||

|

||||

Running Effect Image:

|

||||

|

||||

|

||||

|

||||

#### 1.3.8. <a name='Step5:AccesstheMonitoringInterface'></a>Step 5: Access the Monitoring Interface

|

||||

|

||||

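+ Log in to the [Monitoring Interface](http://localhost:3000/login) using the credentials (`admin:admin`). If Grafana is mapped to a different port in your deployment (section 1.2 uses `13000`), adjust the URL accordingly.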

|

||||

|

||||

#### 1.3.9. <a name='NextSteps'></a>Next Steps

|

||||

|

||||

+ Configure and manage the services following the steps provided in the OpenIM source code.

|

||||

+ Refer to the `README` file for advanced configuration and management.

|

||||

|

||||

#### 1.3.10. <a name='Troubleshooting'></a>Troubleshooting

|

||||

|

||||

+ If you encounter any issues, please check the documentation on [OpenIM Docker GitHub](https://github.com/openimsdk/openim-docker) or search for related issues in the Issues section.

|

||||

+ If the problem persists, you can create an issue on the [openim-docker](https://github.com/openimsdk/openim-docker/issues/new/choose) repository or the [openim-server](https://github.com/openimsdk/open-im-server/issues/new/choose) repository.

|

||||

|

||||

|

||||

|

||||

## 2. <a name='Kubernetes'></a>Kubernetes

|

||||

|

||||

Refer to [openimsdk/helm-charts](https://github.com/openimsdk/helm-charts).

|

||||

|

||||

When deploying and monitoring OpenIM in a Kubernetes environment, you will focus on three main categories of metrics: middleware, custom OpenIM metrics, and Node Exporter. Detailed steps and guidelines follow:

|

||||

|

||||

### 2.1. <a name='MiddlewareMonitoring'></a>Middleware Monitoring

|

||||

|

||||

Middleware monitoring is crucial to ensure the overall system's stability. Typically, this includes monitoring the following components:

|

||||

|

||||

+ **MySQL**: Monitor database performance, query latency, and more.

|

||||

+ **Redis**: Track operation latency, memory usage, and more.

|

||||

+ **MongoDB**: Observe database operations, resource usage, and more.

|

||||

+ **Kafka**: Monitor message throughput, latency, and more.

|

||||

+ **Zookeeper**: Keep an eye on cluster status, performance metrics, and more.

|

||||

|

||||

For Kubernetes environments, you can use the corresponding Prometheus Exporters to collect monitoring data for these middleware components.

|

||||

|

||||

### 2.2. <a name='CustomOpenIMMetrics'></a>Custom OpenIM Metrics

|

||||

|

||||

Custom OpenIM metrics provide essential information about the OpenIM application itself, such as user activity, message traffic, system performance, and more. To monitor these metrics in Kubernetes:

|

||||

|

||||

+ Ensure OpenIM application configurations expose Prometheus metrics.

|

||||

+ When deploying using Helm charts (refer to [OpenIM Helm Charts](https://github.com/openimsdk/helm-charts)), pay attention to configuring relevant monitoring settings.

|

||||

|

||||

### 2.3. <a name='NodeExporter'></a>Node Exporter

|

||||

|

||||

Node Exporter is used to collect hardware and operating system-level metrics for Kubernetes nodes, such as CPU, memory, disk usage, and more. To integrate Node Exporter in Kubernetes:

|

||||

|

||||

+ Deploy Node Exporter using the appropriate Helm chart. For background and setup guidance, see the [Prometheus Node Exporter guide](https://prometheus.io/docs/guides/node-exporter/).

|

||||

+ Ensure Node Exporter's data is collected by Prometheus instances within your cluster.

|

||||

|

||||

|

||||

|

||||

## 3. <a name='SettingUpandConfiguringAlertManagerUsingEnvironmentVariablesandmakeinit'></a>Setting Up and Configuring AlertManager Using Environment Variables and `make init`

|

||||

|

||||

### 3.1. <a name='Introduction-1'></a>Introduction

|

||||

|

||||

AlertManager, a component of the Prometheus monitoring system, handles alerts sent by client applications such as the Prometheus server. It takes care of deduplicating, grouping, and routing them to the correct receiver. This document outlines how to set up and configure AlertManager using environment variables and the `make init` command. We will focus on configuring key fields like the sender's email, SMTP settings, and SMTP authentication password.

|

||||

|

||||

### 3.2. <a name='Prerequisites-1'></a>Prerequisites

|

||||

|

||||

+ Basic knowledge of terminal and command-line operations.

|

||||

+ AlertManager installed on your system.

|

||||

+ Access to an SMTP server for sending emails.

|

||||

|

||||

### 3.3. <a name='ConfigurationSteps'></a>Configuration Steps

|

||||

|

||||

#### 3.3.1. <a name='ExportingEnvironmentVariables'></a>Exporting Environment Variables

|

||||

|

||||

Before initializing AlertManager, you need to set environment variables. These variables are used to configure the AlertManager settings without altering the code. Use the `export` command in your terminal. Here are some key variables you might set:

|

||||

|

||||

+ `export ALERTMANAGER_RESOLVE_TIMEOUT='5m'`

|

||||

+ `export ALERTMANAGER_SMTP_FROM='alert@example.com'`

|

||||

+ `export ALERTMANAGER_SMTP_SMARTHOST='smtp.example.com:465'`

|

||||

+ `export ALERTMANAGER_SMTP_AUTH_USERNAME='alert@example.com'`

|

||||

+ `export ALERTMANAGER_SMTP_AUTH_PASSWORD='your_password'`

|

||||

+ `export ALERTMANAGER_SMTP_REQUIRE_TLS='false'`
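
Because `make init` runs as a child process, it only sees variables that were actually exported. A small pre-flight check along these lines can catch omissions; the function name `missing_alertmanager_vars` and the choice of which variables to treat as required are assumptions for illustration.

```bash
# Print the names of SMTP-related variables that are unset, empty,
# or set but not exported. Prints an empty line when all are present.
missing_alertmanager_vars() {
  local name missing=""
  for name in \
    ALERTMANAGER_SMTP_FROM \
    ALERTMANAGER_SMTP_SMARTHOST \
    ALERTMANAGER_SMTP_AUTH_USERNAME \
    ALERTMANAGER_SMTP_AUTH_PASSWORD; do
    # printenv only reports variables present in the environment.
    if [ -z "$(printenv "$name")" ]; then
      missing="$missing $name"
    fi
  done
  printf '%s\n' "${missing# }"
}
```

Run `missing_alertmanager_vars` before `make init` and stop if it prints any names.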

|

||||

|

||||

#### 3.3.2. <a name='InitializingAlertManager'></a>Initializing AlertManager

|

||||

|

||||

After setting the necessary environment variables, you can initialize AlertManager by running the `make init` command. This command typically runs a script that prepares AlertManager with the provided configuration.

|

||||

|

||||

#### 3.3.3. <a name='KeyConfigurationFields'></a>Key Configuration Fields

|

||||

|

||||

##### a. Sender's Email (`ALERTMANAGER_SMTP_FROM`)

|

||||

|

||||

This variable sets the email address that will appear as the sender in the notifications sent by AlertManager.

|

||||

|

||||

##### b. SMTP Configuration

|

||||

|

||||

+ **SMTP Server (`ALERTMANAGER_SMTP_SMARTHOST`):** Specifies the address and port of the SMTP server used for sending emails.

|

||||

+ **SMTP Authentication Username (`ALERTMANAGER_SMTP_AUTH_USERNAME`):** The username for authenticating with the SMTP server.

|

||||

+ **SMTP Authentication Password (`ALERTMANAGER_SMTP_AUTH_PASSWORD`):** The password for SMTP server authentication. It's crucial to keep this value secure.

|

||||

|

||||

#### 3.3.4. <a name='ConfiguringSMTPAuthenticationPassword'></a>Configuring SMTP Authentication Password

|

||||

|

||||

The SMTP authentication password can be set using the `ALERTMANAGER_SMTP_AUTH_PASSWORD` environment variable. It's recommended to use a secure method to set this variable to avoid exposing sensitive information. For instance, you might read the password from a secure file or a secret management tool.
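
One way to follow this advice is sketched below, under the assumption that the password is stored in a file readable only by the deploying user. The helper name `load_smtp_password` and the path in the usage note are hypothetical.

```bash
# Export ALERTMANAGER_SMTP_AUTH_PASSWORD from a file so the secret
# never appears in shell history or in the script itself.
load_smtp_password() {
  local file="$1"
  if [ ! -r "$file" ]; then
    echo "cannot read password file: $file" >&2
    return 1
  fi
  # Command substitution strips the trailing newline, if any.
  ALERTMANAGER_SMTP_AUTH_PASSWORD="$(cat "$file")"
  export ALERTMANAGER_SMTP_AUTH_PASSWORD
}
```

For example, call `load_smtp_password "$HOME/.openim/smtp_password"` and then run `make init`; restricting the file with `chmod 600` keeps it private to its owner.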

|

||||

|

||||

#### 3.3.5. <a name='UsefulLinksforCommonEmailServers'></a>Useful Links for Common Email Servers

|

||||

|

||||

For specific configurations related to common email servers, you may refer to their respective documentation:

|

||||

|

||||

+ Gmail SMTP Settings:

|

||||

+ [Gmail SMTP Configuration](https://support.google.com/mail/answer/7126229?hl=en)

|

||||

+ Microsoft Outlook SMTP Settings:

|

||||

+ [Outlook Email Settings](https://support.microsoft.com/en-us/office/pop-imap-and-smtp-settings-8361e398-8af4-4e97-b147-6c6c4ac95353)

|

||||

+ Yahoo Mail SMTP Settings:

|

||||

+ [Yahoo SMTP Configuration](https://help.yahoo.com/kb/SLN4724.html)

|

||||

|

||||

### 3.4. <a name='Conclusion'></a>Conclusion

|

||||

|

||||

Setting up and configuring AlertManager with environment variables provides a flexible and secure way to manage alert settings. By following the steps above, you can configure AlertManager for your monitoring needs. Always secure sensitive information, especially SMTP authentication credentials.

|

||||

Binary file not shown.

|

Before Width: | Height: | Size: 144 KiB After Width: | Height: | Size: 118 KiB |

@ -17,22 +17,19 @@ package msggateway

|

||||

import (

|

||||

"context"

|

||||

|

||||

"github.com/OpenIMSDK/tools/mcontext"

|

||||

|

||||

"github.com/openimsdk/open-im-server/v3/pkg/authverify"

|

||||

|

||||

"github.com/OpenIMSDK/tools/errs"

|

||||

"google.golang.org/grpc"

|

||||

|

||||

"github.com/openimsdk/open-im-server/v3/pkg/common/db/cache"

|

||||

|

||||

"github.com/OpenIMSDK/protocol/constant"

|

||||

"github.com/OpenIMSDK/protocol/msggateway"

|

||||

"github.com/OpenIMSDK/tools/discoveryregistry"

|

||||

"github.com/OpenIMSDK/tools/errs"

|

||||

"github.com/OpenIMSDK/tools/log"

|

||||

"github.com/OpenIMSDK/tools/mcontext"

|

||||

"github.com/OpenIMSDK/tools/utils"

|

||||

|

||||

"github.com/openimsdk/open-im-server/v3/pkg/authverify"

|

||||

"github.com/openimsdk/open-im-server/v3/pkg/common/config"

|

||||

"github.com/openimsdk/open-im-server/v3/pkg/common/db/cache"

|

||||

"github.com/openimsdk/open-im-server/v3/pkg/common/startrpc"

|

||||

)

|

||||

|

||||

@ -41,6 +38,7 @@ func (s *Server) InitServer(disCov discoveryregistry.SvcDiscoveryRegistry, serve

|

||||

if err != nil {

|

||||

return err

|

||||

}

|

||||

|

||||

msgModel := cache.NewMsgCacheModel(rdb)

|

||||

s.LongConnServer.SetDiscoveryRegistry(disCov)

|

||||

s.LongConnServer.SetCacheHandler(msgModel)

|

||||

@ -97,22 +95,25 @@ func (s *Server) GetUsersOnlineStatus(

|

||||

if !ok {

|

||||

continue

|

||||

}

|

||||

temp := new(msggateway.GetUsersOnlineStatusResp_SuccessResult)

|

||||

temp.UserID = userID

|

||||

|

||||

uresp := new(msggateway.GetUsersOnlineStatusResp_SuccessResult)

|

||||

uresp.UserID = userID

|

||||

for _, client := range clients {

|

||||

if client != nil {

|

||||

ps := new(msggateway.GetUsersOnlineStatusResp_SuccessDetail)

|

||||

ps.Platform = constant.PlatformIDToName(client.PlatformID)

|

||||

ps.Status = constant.OnlineStatus

|

||||

ps.ConnID = client.ctx.GetConnID()

|

||||

ps.Token = client.token

|

||||

ps.IsBackground = client.IsBackground

|

||||

temp.Status = constant.OnlineStatus

|

||||

temp.DetailPlatformStatus = append(temp.DetailPlatformStatus, ps)

|

||||

if client == nil {

|

||||

continue

|

||||

}

|

||||

|

||||

ps := new(msggateway.GetUsersOnlineStatusResp_SuccessDetail)

|

||||

ps.Platform = constant.PlatformIDToName(client.PlatformID)

|

||||

ps.Status = constant.OnlineStatus

|

||||

ps.ConnID = client.ctx.GetConnID()

|

||||

ps.Token = client.token

|

||||

ps.IsBackground = client.IsBackground

|

||||

uresp.Status = constant.OnlineStatus

|

||||

uresp.DetailPlatformStatus = append(uresp.DetailPlatformStatus, ps)

|

||||

}

|

||||

if temp.Status == constant.OnlineStatus {

|

||||

resp.SuccessResult = append(resp.SuccessResult, temp)

|

||||

if uresp.Status == constant.OnlineStatus {

|

||||

resp.SuccessResult = append(resp.SuccessResult, uresp)

|

||||

}

|

||||

}

|

||||

return &resp, nil

|

||||

@ -129,50 +130,55 @@ func (s *Server) SuperGroupOnlineBatchPushOneMsg(

|

||||

ctx context.Context,

|

||||

req *msggateway.OnlineBatchPushOneMsgReq,

|

||||

) (*msggateway.OnlineBatchPushOneMsgResp, error) {

|

||||

var singleUserResult []*msggateway.SingleMsgToUserResults

|

||||

|

||||

var singleUserResults []*msggateway.SingleMsgToUserResults

|

||||

|

||||

for _, v := range req.PushToUserIDs {

|

||||

var resp []*msggateway.SingleMsgToUserPlatform

|

||||

tempT := &msggateway.SingleMsgToUserResults{

|

||||

results := &msggateway.SingleMsgToUserResults{

|

||||

UserID: v,

|

||||

}

|

||||

clients, ok := s.LongConnServer.GetUserAllCons(v)

|

||||

if !ok {

|

||||

log.ZDebug(ctx, "push user not online", "userID", v)

|

||||

tempT.Resp = resp

|

||||

singleUserResult = append(singleUserResult, tempT)

|

||||

results.Resp = resp

|

||||

singleUserResults = append(singleUserResults, results)

|

||||

continue

|

||||

}

|

||||

|

||||

log.ZDebug(ctx, "push user online", "clients", clients, "userID", v)

|

||||

for _, client := range clients {

|

||||

if client != nil {

|

||||

temp := &msggateway.SingleMsgToUserPlatform{

|

||||

RecvID: v,

|

||||

RecvPlatFormID: int32(client.PlatformID),

|

||||

}

|

||||

if !client.IsBackground ||

|

||||

(client.IsBackground == true && client.PlatformID != constant.IOSPlatformID) {

|

||||

err := client.PushMessage(ctx, req.MsgData)

|

||||

if err != nil {

|

||||

temp.ResultCode = -2

|

||||

resp = append(resp, temp)

|

||||

} else {

|

||||

if utils.IsContainInt(client.PlatformID, s.pushTerminal) {

|

||||

tempT.OnlinePush = true

|

||||

resp = append(resp, temp)

|

||||

}

|

||||

}

|

||||

if client == nil {

|

||||

continue

|

||||

}

|

||||

|

||||

userPlatform := &msggateway.SingleMsgToUserPlatform{

|

||||

RecvID: v,

|

||||

RecvPlatFormID: int32(client.PlatformID),

|

||||

}

|

||||

if !client.IsBackground ||

|

||||

(client.IsBackground && client.PlatformID != constant.IOSPlatformID) {

|

||||

err := client.PushMessage(ctx, req.MsgData)

|

||||

if err != nil {

|

||||

userPlatform.ResultCode = -2

|

||||

resp = append(resp, userPlatform)

|

||||

} else {

|

||||

temp.ResultCode = -3

|

||||

resp = append(resp, temp)

|

||||

if utils.IsContainInt(client.PlatformID, s.pushTerminal) {

|

||||

results.OnlinePush = true

|

||||

resp = append(resp, userPlatform)

|

||||

}

|

||||

}

|

||||

} else {

|

||||

userPlatform.ResultCode = -3

|

||||

resp = append(resp, userPlatform)

|

||||

}

|

||||

}

|

||||

tempT.Resp = resp

|

||||

singleUserResult = append(singleUserResult, tempT)

|

||||

results.Resp = resp

|

||||

singleUserResults = append(singleUserResults, results)

|

||||

}

|

||||

|

||||

return &msggateway.OnlineBatchPushOneMsgResp{

|

||||

SinglePushResult: singleUserResult,

|

||||

SinglePushResult: singleUserResults,

|

||||

}, nil

|

||||

}

|

||||

|

||||

@ -181,17 +187,21 @@ func (s *Server) KickUserOffline(

|

||||

req *msggateway.KickUserOfflineReq,

|

||||

) (*msggateway.KickUserOfflineResp, error) {

|

||||

for _, v := range req.KickUserIDList {

|

||||

if clients, _, ok := s.LongConnServer.GetUserPlatformCons(v, int(req.PlatformID)); ok {

|

||||

for _, client := range clients {

|

||||

log.ZDebug(ctx, "kick user offline", "userID", v, "platformID", req.PlatformID, "client", client)

|

||||

if err := client.longConnServer.KickUserConn(client); err != nil {

|

||||

log.ZWarn(ctx, "kick user offline failed", err, "userID", v, "platformID", req.PlatformID)

|

||||

}

|

||||

}

|

||||

} else {

|

||||

clients, _, ok := s.LongConnServer.GetUserPlatformCons(v, int(req.PlatformID))

|

||||

if !ok {

|

||||

log.ZInfo(ctx, "conn not exist", "userID", v, "platformID", req.PlatformID)

|

||||

continue

|

||||

}

|

||||

|

||||

for _, client := range clients {

|

||||

log.ZDebug(ctx, "kick user offline", "userID", v, "platformID", req.PlatformID, "client", client)

|

||||

if err := client.longConnServer.KickUserConn(client); err != nil {

|

||||

log.ZWarn(ctx, "kick user offline failed", err, "userID", v, "platformID", req.PlatformID)

|

||||

}

|

||||

}

|

||||

continue

|

||||

}

|

||||

|

||||

return &msggateway.KickUserOfflineResp{}, nil

|

||||

}

|

||||

|

||||

|

||||

@ -374,7 +374,7 @@ func (ws *WsServer) unregisterClient(client *Client) {

|

||||

}

|

||||

ws.onlineUserConnNum.Add(-1)

|

||||

ws.SetUserOnlineStatus(client.ctx, client, constant.Offline)

|

||||

log.ZInfo(client.ctx, "user offline", "close reason", client.closedErr, "online user Num", ws.onlineUserNum, "online user conn Num",

|

||||

log.ZInfo(client.ctx, "user offline", "close reason", client.closedErr, "online user Num", ws.onlineUserNum.Load(), "online user conn Num",

|

||||