Compare commits

No commits in common. "master" and "v0.1.8" have entirely different histories.

45

.github/workflows/ci.yml

vendored

@@ -1,45 +0,0 @@
-name: CI
-on:
-  - push
-  - pull_request
-jobs:
-  test:
-    runs-on: ubuntu-20.04
-    strategy:
-      fail-fast: false
-      matrix:
-        python-version:
-          - "2.7"
-          - "3.5"
-          - "3.6"
-          - "3.7"
-          - "3.8"
-          - "3.9"
-          - "3.10"
-    steps:
-      - uses: actions/checkout@v3
-      - name: Set up Python ${{ matrix.python-version }}
-        uses: actions/setup-python@v4
-        with:
-          python-version: ${{ matrix.python-version }}
-      - name: Install ffmpeg
-        run: |
-          sudo apt update
-          sudo apt install ffmpeg
-      - name: Setup pip + tox
-        run: |
-          python -m pip install --upgrade \
-            "pip==20.3.4; python_version < '3.6'" \
-            "pip==21.3.1; python_version >= '3.6'"
-          python -m pip install tox==3.24.5 tox-gh-actions==2.9.1
-      - name: Test with tox
-        run: tox
-  black:
-    runs-on: ubuntu-20.04
-    steps:
-      - uses: actions/checkout@v3
-      - name: Black
-        run: |
-          # TODO: use standard `psf/black` action after dropping Python 2 support.
-          pip install black==21.12b0 click==8.0.2 # https://stackoverflow.com/questions/71673404
-          black ffmpeg --check --color --diff

1

.gitignore

vendored

@@ -5,4 +5,3 @@ dist/
 ffmpeg/tests/sample_data/out*.mp4
 ffmpeg_python.egg-info/
 venv*
-build/

37

.travis.yml

Normal file

@@ -0,0 +1,37 @@
+language: python
+before_install:
+  - >
+    [ -f ffmpeg-release/ffmpeg ] || (
+    curl -O https://johnvansickle.com/ffmpeg/releases/ffmpeg-release-64bit-static.tar.xz &&
+    mkdir -p ffmpeg-release &&
+    tar Jxf ffmpeg-release-64bit-static.tar.xz --strip-components=1 -C ffmpeg-release
+    )
+matrix:
+  include:
+    - python: 2.7
+      env:
+        - TOX_ENV=py27
+    - python: 3.3
+      env:
+        - TOX_ENV=py33
+    - python: 3.4
+      env:
+        - TOX_ENV=py34
+    - python: 3.5
+      env:
+        - TOX_ENV=py35
+    - python: 3.6
+      env:
+        - TOX_ENV=py36
+    - python: pypy
+      env:
+        - TOX_ENV=pypy
+install:
+  - pip install tox
+script:
+  - export PATH=$(readlink -f ffmpeg-release):$PATH
+  - tox -e $TOX_ENV
+cache:
+  directories:
+    - .tox
+    - ffmpeg-release

208

LICENSE

@@ -1,201 +1,13 @@
-Apache License
-Version 2.0, January 2004
-http://www.apache.org/licenses/
+Copyright 2017 Karl Kroening
 
-TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION
+Licensed under the Apache License, Version 2.0 (the "License");
+you may not use this file except in compliance with the License.
+You may obtain a copy of the License at
 
-1. Definitions.
+http://www.apache.org/licenses/LICENSE-2.0
 
-"License" shall mean the terms and conditions for use, reproduction,
-and distribution as defined by Sections 1 through 9 of this document.
-
-"Licensor" shall mean the copyright owner or entity authorized by
-the copyright owner that is granting the License.
+Unless required by applicable law or agreed to in writing, software
+distributed under the License is distributed on an "AS IS" BASIS,
+WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+See the License for the specific language governing permissions and
+limitations under the License.
-
-"Legal Entity" shall mean the union of the acting entity and all
-other entities that control, are controlled by, or are under common
-control with that entity. For the purposes of this definition,
-"control" means (i) the power, direct or indirect, to cause the
-direction or management of such entity, whether by contract or
-otherwise, or (ii) ownership of fifty percent (50%) or more of the
-outstanding shares, or (iii) beneficial ownership of such entity.
-
-"You" (or "Your") shall mean an individual or Legal Entity
-exercising permissions granted by this License.
-
-"Source" form shall mean the preferred form for making modifications,
-including but not limited to software source code, documentation
-source, and configuration files.
-
-"Object" form shall mean any form resulting from mechanical
-transformation or translation of a Source form, including but
-not limited to compiled object code, generated documentation,
-and conversions to other media types.
-
-"Work" shall mean the work of authorship, whether in Source or
-Object form, made available under the License, as indicated by a
-copyright notice that is included in or attached to the work
-(an example is provided in the Appendix below).
-
-"Derivative Works" shall mean any work, whether in Source or Object
-form, that is based on (or derived from) the Work and for which the
-editorial revisions, annotations, elaborations, or other modifications
-represent, as a whole, an original work of authorship. For the purposes
-of this License, Derivative Works shall not include works that remain
-separable from, or merely link (or bind by name) to the interfaces of,
-the Work and Derivative Works thereof.
-
-"Contribution" shall mean any work of authorship, including
-the original version of the Work and any modifications or additions
-to that Work or Derivative Works thereof, that is intentionally
-submitted to Licensor for inclusion in the Work by the copyright owner
-or by an individual or Legal Entity authorized to submit on behalf of
-the copyright owner. For the purposes of this definition, "submitted"
-means any form of electronic, verbal, or written communication sent
-to the Licensor or its representatives, including but not limited to
-communication on electronic mailing lists, source code control systems,
-and issue tracking systems that are managed by, or on behalf of, the
-Licensor for the purpose of discussing and improving the Work, but
-excluding communication that is conspicuously marked or otherwise
-designated in writing by the copyright owner as "Not a Contribution."
-
-"Contributor" shall mean Licensor and any individual or Legal Entity
-on behalf of whom a Contribution has been received by Licensor and
-subsequently incorporated within the Work.
-
-2. Grant of Copyright License. Subject to the terms and conditions of
-this License, each Contributor hereby grants to You a perpetual,
-worldwide, non-exclusive, no-charge, royalty-free, irrevocable
-copyright license to reproduce, prepare Derivative Works of,
-publicly display, publicly perform, sublicense, and distribute the
-Work and such Derivative Works in Source or Object form.
-
-3. Grant of Patent License. Subject to the terms and conditions of
-this License, each Contributor hereby grants to You a perpetual,
-worldwide, non-exclusive, no-charge, royalty-free, irrevocable
-(except as stated in this section) patent license to make, have made,
-use, offer to sell, sell, import, and otherwise transfer the Work,
-where such license applies only to those patent claims licensable
-by such Contributor that are necessarily infringed by their
-Contribution(s) alone or by combination of their Contribution(s)
-with the Work to which such Contribution(s) was submitted. If You
-institute patent litigation against any entity (including a
-cross-claim or counterclaim in a lawsuit) alleging that the Work
-or a Contribution incorporated within the Work constitutes direct
-or contributory patent infringement, then any patent licenses
-granted to You under this License for that Work shall terminate
-as of the date such litigation is filed.
-
-4. Redistribution. You may reproduce and distribute copies of the
-Work or Derivative Works thereof in any medium, with or without
-modifications, and in Source or Object form, provided that You
-meet the following conditions:
-
-(a) You must give any other recipients of the Work or
-Derivative Works a copy of this License; and
-
-(b) You must cause any modified files to carry prominent notices
-stating that You changed the files; and
-
-(c) You must retain, in the Source form of any Derivative Works
-that You distribute, all copyright, patent, trademark, and
-attribution notices from the Source form of the Work,
-excluding those notices that do not pertain to any part of
-the Derivative Works; and
-
-(d) If the Work includes a "NOTICE" text file as part of its
-distribution, then any Derivative Works that You distribute must
-include a readable copy of the attribution notices contained
-within such NOTICE file, excluding those notices that do not
-pertain to any part of the Derivative Works, in at least one
-of the following places: within a NOTICE text file distributed
-as part of the Derivative Works; within the Source form or
-documentation, if provided along with the Derivative Works; or,
-within a display generated by the Derivative Works, if and
-wherever such third-party notices normally appear. The contents
-of the NOTICE file are for informational purposes only and
-do not modify the License. You may add Your own attribution
-notices within Derivative Works that You distribute, alongside
-or as an addendum to the NOTICE text from the Work, provided
-that such additional attribution notices cannot be construed
-as modifying the License.
-
-You may add Your own copyright statement to Your modifications and
-may provide additional or different license terms and conditions
-for use, reproduction, or distribution of Your modifications, or
-for any such Derivative Works as a whole, provided Your use,
-reproduction, and distribution of the Work otherwise complies with
-the conditions stated in this License.
-
-5. Submission of Contributions. Unless You explicitly state otherwise,
-any Contribution intentionally submitted for inclusion in the Work
-by You to the Licensor shall be under the terms and conditions of
-this License, without any additional terms or conditions.
-Notwithstanding the above, nothing herein shall supersede or modify
-the terms of any separate license agreement you may have executed
-with Licensor regarding such Contributions.
-
-6. Trademarks. This License does not grant permission to use the trade
-names, trademarks, service marks, or product names of the Licensor,
-except as required for reasonable and customary use in describing the
-origin of the Work and reproducing the content of the NOTICE file.
-
-7. Disclaimer of Warranty. Unless required by applicable law or
-agreed to in writing, Licensor provides the Work (and each
-Contributor provides its Contributions) on an "AS IS" BASIS,
-WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or
-implied, including, without limitation, any warranties or conditions
-of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A
-PARTICULAR PURPOSE. You are solely responsible for determining the
-appropriateness of using or redistributing the Work and assume any
-risks associated with Your exercise of permissions under this License.
-
-8. Limitation of Liability. In no event and under no legal theory,
-whether in tort (including negligence), contract, or otherwise,
-unless required by applicable law (such as deliberate and grossly
-negligent acts) or agreed to in writing, shall any Contributor be
-liable to You for damages, including any direct, indirect, special,
-incidental, or consequential damages of any character arising as a
-result of this License or out of the use or inability to use the
-Work (including but not limited to damages for loss of goodwill,
-work stoppage, computer failure or malfunction, or any and all
-other commercial damages or losses), even if such Contributor
-has been advised of the possibility of such damages.
-
-9. Accepting Warranty or Additional Liability. While redistributing
-the Work or Derivative Works thereof, You may choose to offer,
-and charge a fee for, acceptance of support, warranty, indemnity,
-or other liability obligations and/or rights consistent with this
-License. However, in accepting such obligations, You may act only
-on Your own behalf and on Your sole responsibility, not on behalf
-of any other Contributor, and only if You agree to indemnify,
-defend, and hold each Contributor harmless for any liability
-incurred by, or claims asserted against, such Contributor by reason
-of your accepting any such warranty or additional liability.
-
-END OF TERMS AND CONDITIONS
-
-APPENDIX: How to apply the Apache License to your work.
-
-To apply the Apache License to your work, attach the following
-boilerplate notice, with the fields enclosed by brackets "[]"
-replaced with your own identifying information. (Don't include
-the brackets!) The text should be enclosed in the appropriate
-comment syntax for the file format. We also recommend that a
-file or class name and description of purpose be included on the
-same "printed page" as the copyright notice for easier
-identification within third-party archives.
-
-Copyright 2017 Karl Kroening
-
-Licensed under the Apache License, Version 2.0 (the "License");
-you may not use this file except in compliance with the License.
-You may obtain a copy of the License at
-
-http://www.apache.org/licenses/LICENSE-2.0
-
-Unless required by applicable law or agreed to in writing, software
-distributed under the License is distributed on an "AS IS" BASIS,
-WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
-See the License for the specific language governing permissions and
-limitations under the License.

235

README.md

@@ -1,21 +1,16 @@
 # ffmpeg-python: Python bindings for FFmpeg
 
-[![CI][ci-badge]][ci]
+[](https://travis-ci.org/kkroening/ffmpeg-python)
 
-[ci-badge]: https://github.com/kkroening/ffmpeg-python/actions/workflows/ci.yml/badge.svg
-[ci]: https://github.com/kkroening/ffmpeg-python/actions/workflows/ci.yml
-
-<img src="https://raw.githubusercontent.com/kkroening/ffmpeg-python/master/doc/formula.png" alt="ffmpeg-python logo" width="60%" />
-
 ## Overview
 
 There are tons of Python FFmpeg wrappers out there but they seem to lack complex filter support. `ffmpeg-python` works well for simple as well as complex signal graphs.
 
 
 ## Quickstart
 
 Flip a video horizontally:
-```python
+```
 import ffmpeg
 stream = ffmpeg.input('input.mp4')
 stream = ffmpeg.hflip(stream)

@@ -24,10 +19,9 @@ ffmpeg.run(stream)
 ```
 
 Or if you prefer a fluent interface:
-```python
+```
 import ffmpeg
-(
-ffmpeg
+(ffmpeg
 .input('input.mp4')
 .hflip()
 .output('output.mp4')

@ -35,33 +29,35 @@ import ffmpeg

|

|||||||

)

|

)

|

||||||

```

|

```

|

||||||

|

|

||||||

## [API reference](https://kkroening.github.io/ffmpeg-python/)

|

|

||||||

|

|

||||||

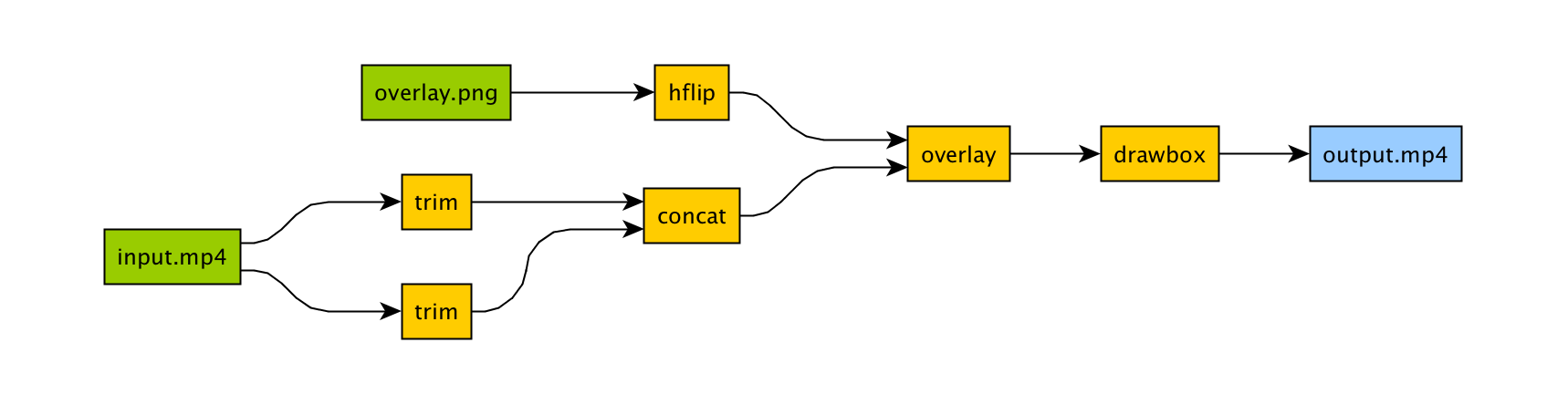

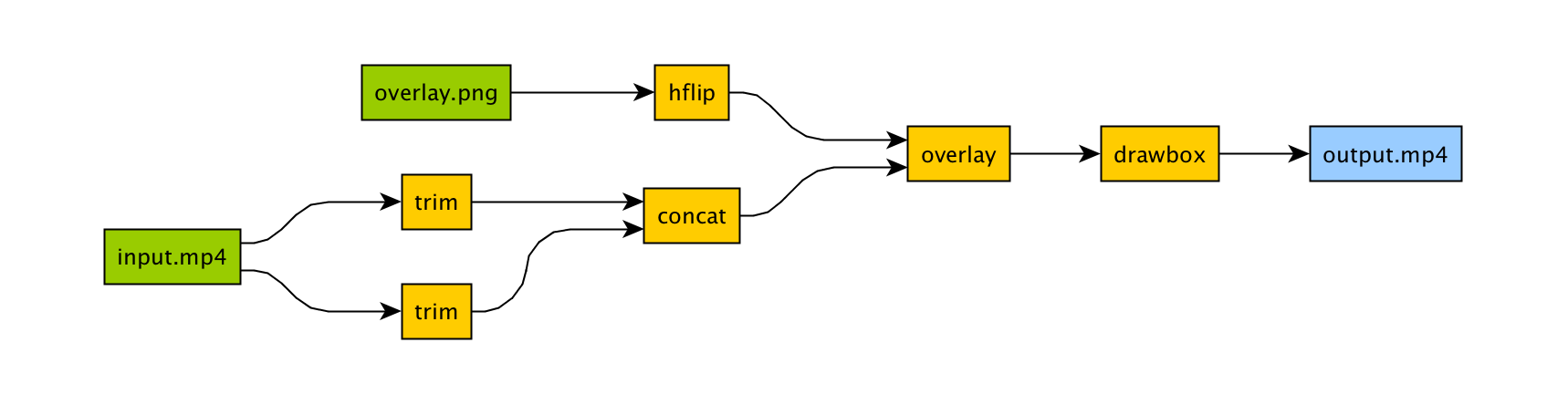

## Complex filter graphs

|

## Complex filter graphs

|

||||||

FFmpeg is extremely powerful, but its command-line interface gets really complicated rather quickly - especially when working with signal graphs and doing anything more than trivial.

|

FFmpeg is extremely powerful, but its command-line interface gets really complicated really quickly - especially when working with signal graphs and doing anything more than trivial.

|

||||||

|

|

||||||

Take for example a signal graph that looks like this:

|

Take for example a signal graph that looks like this:

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

The corresponding command-line arguments are pretty gnarly:

|

The corresponding command-line arguments are pretty gnarly:

|

||||||

```bash

|

```

|

||||||

ffmpeg -i input.mp4 -i overlay.png -filter_complex "[0]trim=start_frame=10:end_frame=20[v0];\

|

ffmpeg -i input.mp4 \

|

||||||

[0]trim=start_frame=30:end_frame=40[v1];[v0][v1]concat=n=2[v2];[1]hflip[v3];\

|

-filter_complex "\

|

||||||

[v2][v3]overlay=eof_action=repeat[v4];[v4]drawbox=50:50:120:120:red:t=5[v5]"\

|

[0]trim=start_frame=10:end_frame=20[v0];\

|

||||||

-map [v5] output.mp4

|

[0]trim=start_frame=30:end_frame=40[v1];\

|

||||||

|

[v0][v1]concat=n=2[v2];\

|

||||||

|

[1]hflip[v3];\

|

||||||

|

[v2][v3]overlay=eof_action=repeat[v4];\

|

||||||

|

[v4]drawbox=50:50:120:120:red:t=5[v5]"\

|

||||||

|

-map [v5] output.mp4

|

||||||

```

|

```

|

||||||

|

|

||||||

Maybe this looks great to you, but if you're not an FFmpeg command-line expert, it probably looks alien.

|

Maybe this looks great to you, but if you're not an FFmpeg command-line expert, it probably looks alien.

|

||||||

|

|

||||||

If you're like me and find Python to be powerful and readable, it's easier with `ffmpeg-python`:

|

If you're like me and find Python to be powerful and readable, it's easy with `ffmpeg-python`:

|

||||||

```python

|

```

|

||||||

import ffmpeg

|

import ffmpeg

|

||||||

|

|

||||||

in_file = ffmpeg.input('input.mp4')

|

in_file = ffmpeg.input('input.mp4')

|

||||||

overlay_file = ffmpeg.input('overlay.png')

|

overlay_file = ffmpeg.input('overlay.png')

|

||||||

(

|

(ffmpeg

|

||||||

ffmpeg

|

|

||||||

.concat(

|

.concat(

|

||||||

in_file.trim(start_frame=10, end_frame=20),

|

in_file.trim(start_frame=10, end_frame=20),

|

||||||

in_file.trim(start_frame=30, end_frame=40),

|

in_file.trim(start_frame=30, end_frame=40),

|

||||||

@@ -73,215 +69,74 @@ overlay_file = ffmpeg.input('overlay.png')
 )
 ```
 
-`ffmpeg-python` takes care of running `ffmpeg` with the command-line arguments that correspond to the above filter diagram, in familiar Python terms.
+`ffmpeg-python` takes care of running `ffmpeg` with the command-line arguments that correspond to the above filter diagram, and it's easy to see what's going on and make changes as needed.
 
 <img src="https://raw.githubusercontent.com/kkroening/ffmpeg-python/master/doc/screenshot.png" alt="Screenshot" align="middle" width="60%" />
 
-Real-world signal graphs can get a heck of a lot more complex, but `ffmpeg-python` handles arbitrarily large (directed-acyclic) signal graphs.
+Real-world signal graphs can get a heck of a lot more complex, but `ffmpeg-python` handles them with ease.
 
 
 ## Installation
 
-### Installing `ffmpeg-python`
+The easiest way to acquire the latest version of `ffmpeg-python` is through pip:
 
-The latest version of `ffmpeg-python` can be acquired via a typical pip install:
-
-```bash
+```
 pip install ffmpeg-python
 ```
 
-Or the source can be cloned and installed from locally:
-```bash
-git clone git@github.com:kkroening/ffmpeg-python.git
-pip install -e ./ffmpeg-python
+It's also possible to clone the source and put it on your python path (`$PYTHONPATH`, `sys.path`, etc.):
+```
+> git clone git@github.com:kkroening/ffmpeg-python.git
+> export PYTHONPATH=${PYTHONPATH}:ffmpeg-python
+> python
+>>> import ffmpeg
 ```
 
-> **Note**: `ffmpeg-python` makes no attempt to download/install FFmpeg, as `ffmpeg-python` is merely a pure-Python wrapper - whereas FFmpeg installation is platform-dependent/environment-specific, and is thus the responsibility of the user, as described below.
+## [API Reference](https://kkroening.github.io/ffmpeg-python/)
 
-### Installing FFmpeg
-
-Before using `ffmpeg-python`, FFmpeg must be installed and accessible via the `$PATH` environment variable.
-
-There are a variety of ways to install FFmpeg, such as the [official download links](https://ffmpeg.org/download.html), or using your package manager of choice (e.g. `sudo apt install ffmpeg` on Debian/Ubuntu, `brew install ffmpeg` on OS X, etc.).
-
-Regardless of how FFmpeg is installed, you can check if your environment path is set correctly by running the `ffmpeg` command from the terminal, in which case the version information should appear, as in the following example (truncated for brevity):
+API documentation is automatically generated from python docstrings and hosted on github pages: https://kkroening.github.io/ffmpeg-python/
 
+Alternatively, standard python help is available, such as at the python REPL prompt as follows:
 ```
-$ ffmpeg
-ffmpeg version 4.2.4-1ubuntu0.1 Copyright (c) 2000-2020 the FFmpeg developers
-built with gcc 9 (Ubuntu 9.3.0-10ubuntu2)
+import ffmpeg
+help(ffmpeg)
 ```
 
-> **Note**: The actual version information displayed here may vary from one system to another; but if a message such as `ffmpeg: command not found` appears instead of the version information, FFmpeg is not properly installed.
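The `$PATH` check described under "Installing FFmpeg" can also be done from Python before any `ffmpeg-python` call. The following is a minimal sketch using only the standard library; the helper name `find_ffmpeg` is hypothetical and is not part of `ffmpeg-python`.

```python
import shutil
import subprocess


def find_ffmpeg(executable="ffmpeg"):
    """Return the first line of `<executable> -version`, or None if it is not on PATH."""
    path = shutil.which(executable)
    if path is None:
        # Same situation as the shell printing `ffmpeg: command not found`.
        return None
    result = subprocess.run(
        [path, "-version"],
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,
        universal_newlines=True,
    )
    lines = result.stdout.splitlines()
    return lines[0] if lines else ""


if __name__ == "__main__":
    banner = find_ffmpeg()
    print(banner if banner is not None else "FFmpeg not found on PATH")
```

Returning `None` rather than raising keeps the check usable as a precondition: a caller can decide whether a missing binary is fatal or merely disables video features.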

-
-## [Examples](https://github.com/kkroening/ffmpeg-python/tree/master/examples)
-
-When in doubt, take a look at the [examples](https://github.com/kkroening/ffmpeg-python/tree/master/examples) to see if there's something that's close to whatever you're trying to do.
-
-Here are a few:
-- [Convert video to numpy array](https://github.com/kkroening/ffmpeg-python/blob/master/examples/README.md#convert-video-to-numpy-array)
-- [Generate thumbnail for video](https://github.com/kkroening/ffmpeg-python/blob/master/examples/README.md#generate-thumbnail-for-video)
-- [Read raw PCM audio via pipe](https://github.com/kkroening/ffmpeg-python/blob/master/examples/README.md#convert-sound-to-raw-pcm-audio)
-
-- [JupyterLab/Notebook stream editor](https://github.com/kkroening/ffmpeg-python/blob/master/examples/README.md#jupyter-stream-editor)
-
-<img src="https://raw.githubusercontent.com/kkroening/ffmpeg-python/master/doc/jupyter-demo.gif" alt="jupyter demo" width="75%" />
-
-- [Tensorflow/DeepDream streaming](https://github.com/kkroening/ffmpeg-python/blob/master/examples/README.md#tensorflow-streaming)
-
-<img src="https://raw.githubusercontent.com/kkroening/ffmpeg-python/master/examples/graphs/dream.png" alt="deep dream streaming" width="40%" />
-
-See the [Examples README](https://github.com/kkroening/ffmpeg-python/tree/master/examples) for additional examples.
-
 ## Custom Filters
 
-Don't see the filter you're looking for? While `ffmpeg-python` includes shorthand notation for some of the most commonly used filters (such as `concat`), all filters can be referenced via the `.filter` operator:
-```python
+Don't see the filter you're looking for? `ffmpeg-python` is a work in progress, but it's easy to use any arbitrary ffmpeg filter:
+```
 stream = ffmpeg.input('dummy.mp4')
-stream = ffmpeg.filter(stream, 'fps', fps=25, round='up')
+stream = ffmpeg.filter_(stream, 'fps', fps=25, round='up')
 stream = ffmpeg.output(stream, 'dummy2.mp4')
 ffmpeg.run(stream)
 ```
 
 Or fluently:
-```python
-(
-ffmpeg
+```
+(ffmpeg
 .input('dummy.mp4')
-.filter('fps', fps=25, round='up')
+.filter_('fps', fps=25, round='up')
 .output('dummy2.mp4')
 .run()
 )
 ```
 
-**Special option names:**
+When in doubt, refer to the [existing filters](https://github.com/kkroening/ffmpeg-python/blob/master/ffmpeg/_filters.py) and/or the [official ffmpeg documentation](https://ffmpeg.org/ffmpeg-filters.html).
 
-Arguments with special names such as `-qscale:v` (variable bitrate), `-b:v` (constant bitrate), etc. can be specified as a keyword-args dictionary as follows:
-```python
-(
-ffmpeg
-.input('in.mp4')
-.output('out.mp4', **{'qscale:v': 3})
-.run()
-)
-```
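The dictionary form is needed because a name such as `qscale:v` is not a valid Python identifier, so it cannot be written as an ordinary keyword argument, while `**` unpacking accepts any string key. The sketch below illustrates only that language mechanism; `to_cli_args` is a hypothetical stand-in written for this note and is not how `ffmpeg-python` builds its arguments.

```python
def to_cli_args(filename, **kwargs):
    """Hypothetical stand-in for an output call: turn keyword options into CLI flags."""
    args = []
    for name in sorted(kwargs):
        args += ["-" + name, str(kwargs[name])]
    return args + [filename]


# Writing `qscale:v=3` directly would be a syntax error; unpacking a dict is not.
print(to_cli_args("out.mp4", **{"qscale:v": 3}))
```

Ordinary options can still be passed as plain keywords alongside the unpacked dictionary, so the workaround is only needed for the names that contain characters such as `:`.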

|

|

||||||

**Multiple inputs:**

|

|

||||||

|

|

||||||

Filters that take multiple input streams can be used by passing the input streams as an array to `ffmpeg.filter`:

|

|

||||||

```python

|

|

||||||

main = ffmpeg.input('main.mp4')

|

|

||||||

logo = ffmpeg.input('logo.png')

|

|

||||||

(

|

|

||||||

ffmpeg

|

|

||||||

.filter([main, logo], 'overlay', 10, 10)

|

|

||||||

.output('out.mp4')

|

|

||||||

.run()

|

|

||||||

)

|

|

||||||

```

|

|

||||||

|

|

||||||

**Multiple outputs:**

|

|

||||||

|

|

||||||

Filters that produce multiple outputs can be used with `.filter_multi_output`:

|

|

||||||

```python

|

|

||||||

split = (

|

|

||||||

ffmpeg

|

|

||||||

.input('in.mp4')

|

|

||||||

.filter_multi_output('split') # or `.split()`

|

|

||||||

)

|

|

||||||

(

|

|

||||||

ffmpeg

|

|

||||||

.concat(split[0], split[1].reverse())

|

|

||||||

.output('out.mp4')

|

|

||||||

.run()

|

|

||||||

)

|

|

||||||

```

|

|

||||||

(In this particular case, `.split()` is the equivalent shorthand, but the general approach works for other multi-output filters)

|

|

||||||

|

|

||||||

**String expressions:**

|

|

||||||

|

|

||||||

Expressions to be interpreted by ffmpeg can be included as string parameters and reference any special ffmpeg variable names:

|

|

||||||

```python

|

|

||||||

(

|

|

||||||

ffmpeg

|

|

||||||

.input('in.mp4')

|

|

||||||

.filter('crop', 'in_w-2*10', 'in_h-2*20')

|

|

||||||

.input('out.mp4')

|

|

||||||

)

|

|

||||||

```

|

|

||||||

|

|

||||||

<br />

|

|

||||||

|

|

||||||

When in doubt, refer to the [existing filters](https://github.com/kkroening/ffmpeg-python/blob/master/ffmpeg/_filters.py), [examples](https://github.com/kkroening/ffmpeg-python/tree/master/examples), and/or the [official ffmpeg documentation](https://ffmpeg.org/ffmpeg-filters.html).

|

|

||||||

|

|

||||||

## Frequently asked questions

|

|

||||||

|

|

||||||

**Why do I get an import/attribute/etc. error from `import ffmpeg`?**

|

|

||||||

|

|

||||||

Make sure you ran `pip install ffmpeg-python` and _**not**_ `pip install ffmpeg` (wrong) or `pip install python-ffmpeg` (also wrong).

|

|

||||||

|

|

||||||

**Why did my audio stream get dropped?**

|

|

||||||

|

|

||||||

Some ffmpeg filters drop audio streams, and care must be taken to preserve the audio in the final output. The ``.audio`` and ``.video`` operators can be used to reference the audio/video portions of a stream so that they can be processed separately and then re-combined later in the pipeline.

|

|

||||||

|

|

||||||

This dilemma is intrinsic to ffmpeg, and ffmpeg-python tries to stay out of the way while users may refer to the official ffmpeg documentation as to why certain filters drop audio.

|

|

||||||

|

|

||||||

As usual, take a look at the [examples](https://github.com/kkroening/ffmpeg-python/tree/master/examples#audiovideo-pipeline) (*Audio/video pipeline* in particular).

|

|

||||||

|

|

||||||

**How can I find out which command line arguments are used?**

|

|

||||||

|

|

||||||

You can call `stream.get_args()` before `stream.run()` to retrieve the command line arguments that will be passed to `ffmpeg`. You can also call `stream.compile()`, which additionally includes the `ffmpeg` executable as the first argument.
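
To inspect the result as a single copy-pasteable command, the argument list can be joined with shell quoting. This is a minimal sketch using only the standard library: `format_command` is a hypothetical helper, and the sample list is an illustrative stand-in for a list of arguments, not output captured from `ffmpeg-python`.

```python
import shlex


def format_command(args):
    """Join an argument list into one shell-quoted command line."""
    return " ".join(shlex.quote(str(arg)) for arg in args)


# Illustrative stand-in for the list returned by `stream.compile()`;
# these values are assumed for the sketch, not captured output.
sample_args = ["ffmpeg", "-i", "my input.mp4", "out.mp4"]
print(format_command(sample_args))
```

Quoting matters mainly for file names with spaces and for filter graphs, which tend to contain characters the shell would otherwise interpret.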

|

|

||||||

|

|

||||||

**How do I do XYZ?**

|

|

||||||

|

|

||||||

Take a look at each of the links in the [Additional Resources](https://kkroening.github.io/ffmpeg-python/) section at the end of this README. If you look everywhere and can't find what you're looking for and have a question that may be relevant to other users, you may open an issue asking how to do it, while providing a thorough explanation of what you're trying to do and what you've tried so far.

|

|

||||||

|

|

||||||

Issues that are not directly related to `ffmpeg-python`, that ask others to write your code for you, or that ask others to solve a complex signal processing problem that's not relevant to other users will be closed.

|

|

||||||

|

|

||||||

That said, we hope to continue improving our documentation and provide a community of support for people using `ffmpeg-python` to do cool and exciting things.

|

|

||||||

|

|

||||||

## Contributing

|

## Contributing

|

||||||

|

|

||||||

<img align="right" src="https://raw.githubusercontent.com/kkroening/ffmpeg-python/master/doc/logo.png" alt="ffmpeg-python logo" width="20%" />

|

Feel free to report any bugs or feature requests.

|

||||||

|

|

||||||

One of the best things you can do to help make `ffmpeg-python` better is to answer [open questions](https://github.com/kkroening/ffmpeg-python/labels/question) in the issue tracker. The questions that are answered will be tagged and incorporated into the documentation, examples, and other learning resources.

|

It should be fairly easy to use filters that aren't explicitly built into `ffmpeg-python` but if there's a feature or filter you'd really like to see included in the library, don't hesitate to open a feature request.

|

||||||

|

|

||||||

If you notice things that could be better in the documentation or overall development experience, please say so in the [issue tracker](https://github.com/kkroening/ffmpeg-python/issues). And of course, feel free to report any bugs or submit feature requests.

|

Pull requests are welcome as well.

|

||||||

|

|

||||||

Pull requests are welcome as well, but it wouldn't hurt to touch base in the issue tracker or hop on the [Matrix chat channel](https://riot.im/app/#/room/#ffmpeg-python:matrix.org) first.

|

|

||||||

|

|

||||||

Anyone who fixes any of the [open bugs](https://github.com/kkroening/ffmpeg-python/labels/bug) or implements [requested enhancements](https://github.com/kkroening/ffmpeg-python/labels/enhancement) is a hero, but changes should include passing tests.

|

|

||||||

|

|

||||||

### Running tests

|

|

||||||

|

|

||||||

```bash

|

|

||||||

git clone git@github.com:kkroening/ffmpeg-python.git

|

|

||||||

cd ffmpeg-python

|

|

||||||

virtualenv venv

|

|

||||||

. venv/bin/activate # (OS X / Linux)

|

|

||||||

venv\Scripts\activate # (Windows)

|

|

||||||

pip install -e .[dev]

|

|

||||||

pytest

|

|

||||||

```

|

|

||||||

|

|

||||||

<br />

|

|

||||||

|

|

||||||

### Special thanks

|

|

||||||

|

|

||||||

- [Fabrice Bellard](https://bellard.org/)

|

|

||||||

- [The FFmpeg team](https://ffmpeg.org/donations.html)

|

|

||||||

- [Arne de Laat](https://github.com/153957)

|

|

||||||

- [Davide Depau](https://github.com/depau)

|

|

||||||

- [Dim](https://github.com/lloti)

|

|

||||||

- [Noah Stier](https://github.com/noahstier)

|

|

||||||

|

|

||||||

## Additional Resources

|

## Additional Resources

|

||||||

|

|

||||||

- [API Reference](https://kkroening.github.io/ffmpeg-python/)

|

- [API Reference](https://kkroening.github.io/ffmpeg-python/)

|

||||||

- [Examples](https://github.com/kkroening/ffmpeg-python/tree/master/examples)

|

|

||||||

- [Filters](https://github.com/kkroening/ffmpeg-python/blob/master/ffmpeg/_filters.py)

|

- [Filters](https://github.com/kkroening/ffmpeg-python/blob/master/ffmpeg/_filters.py)

|

||||||

|

- [Tests](https://github.com/kkroening/ffmpeg-python/blob/master/ffmpeg/tests/test_ffmpeg.py)

|

||||||

- [FFmpeg Homepage](https://ffmpeg.org/)

|

- [FFmpeg Homepage](https://ffmpeg.org/)

|

||||||

- [FFmpeg Documentation](https://ffmpeg.org/ffmpeg.html)

|

- [FFmpeg Documentation](https://ffmpeg.org/ffmpeg.html)

|

||||||

- [FFmpeg Filters Documentation](https://ffmpeg.org/ffmpeg-filters.html)

|

- [FFmpeg Filters Documentation](https://ffmpeg.org/ffmpeg-filters.html)

|

||||||

- [Test cases](https://github.com/kkroening/ffmpeg-python/blob/master/ffmpeg/tests/test_ffmpeg.py)

|

|

||||||

- [Issue tracker](https://github.com/kkroening/ffmpeg-python/issues)

|

|

||||||

- Matrix Chat: [#ffmpeg-python:matrix.org](https://riot.im/app/#/room/#ffmpeg-python:matrix.org)

|

|

||||||

|

|||||||

BIN

doc/formula.png

|

Before Width: | Height: | Size: 48 KiB |

BIN

doc/formula.xcf

@ -1,4 +1,4 @@

|

|||||||

# Sphinx build info version 1

|

# Sphinx build info version 1

|

||||||

# This file hashes the configuration used when building these files. When it is not found, a full rebuild will be done.

|

# This file hashes the configuration used when building these files. When it is not found, a full rebuild will be done.

|

||||||

config: f3635c9edf6e9bff1735d57d26069ada

|

config: d3019c15b90af9d4beabe6f0fbc238a9

|

||||||

tags: 645f666f9bcd5a90fca523b33c5a78b7

|

tags: 645f666f9bcd5a90fca523b33c5a78b7

|

||||||

|

|||||||

BIN

doc/html/_static/ajax-loader.gif

Normal file

|

After Width: | Height: | Size: 673 B |

@ -4,7 +4,7 @@

|

|||||||

*

|

*

|

||||||

* Sphinx stylesheet -- basic theme.

|

* Sphinx stylesheet -- basic theme.

|

||||||

*

|

*

|

||||||

* :copyright: Copyright 2007-2019 by the Sphinx team, see AUTHORS.

|

* :copyright: Copyright 2007-2017 by the Sphinx team, see AUTHORS.

|

||||||

* :license: BSD, see LICENSE for details.

|

* :license: BSD, see LICENSE for details.

|

||||||

*

|

*

|

||||||

*/

|

*/

|

||||||

@ -81,26 +81,10 @@ div.sphinxsidebar input {

|

|||||||

font-size: 1em;

|

font-size: 1em;

|

||||||

}

|

}

|

||||||

|

|

||||||

div.sphinxsidebar #searchbox form.search {

|

|

||||||

overflow: hidden;

|

|

||||||

}

|

|

||||||

|

|

||||||

div.sphinxsidebar #searchbox input[type="text"] {

|

div.sphinxsidebar #searchbox input[type="text"] {

|

||||||

float: left;

|

width: 170px;

|

||||||

width: 80%;

|

|

||||||

padding: 0.25em;

|

|

||||||

box-sizing: border-box;

|

|

||||||

}

|

}

|

||||||

|

|

||||||

div.sphinxsidebar #searchbox input[type="submit"] {

|

|

||||||

float: left;

|

|

||||||

width: 20%;

|

|

||||||

border-left: none;

|

|

||||||

padding: 0.25em;

|

|

||||||

box-sizing: border-box;

|

|

||||||

}

|

|

||||||

|

|

||||||

|

|

||||||

img {

|

img {

|

||||||

border: 0;

|

border: 0;

|

||||||

max-width: 100%;

|

max-width: 100%;

|

||||||

@ -215,11 +199,6 @@ table.modindextable td {

|

|||||||

|

|

||||||

/* -- general body styles --------------------------------------------------- */

|

/* -- general body styles --------------------------------------------------- */

|

||||||

|

|

||||||

div.body {

|

|

||||||

min-width: 450px;

|

|

||||||

max-width: 800px;

|

|

||||||

}

|

|

||||||

|

|

||||||

div.body p, div.body dd, div.body li, div.body blockquote {

|

div.body p, div.body dd, div.body li, div.body blockquote {

|

||||||

-moz-hyphens: auto;

|

-moz-hyphens: auto;

|

||||||

-ms-hyphens: auto;

|

-ms-hyphens: auto;

|

||||||

@ -231,16 +210,6 @@ a.headerlink {

|

|||||||

visibility: hidden;

|

visibility: hidden;

|

||||||

}

|

}

|

||||||

|

|

||||||

a.brackets:before,

|

|

||||||

span.brackets > a:before{

|

|

||||||

content: "[";

|

|

||||||

}

|

|

||||||

|

|

||||||

a.brackets:after,

|

|

||||||

span.brackets > a:after {

|

|

||||||

content: "]";

|

|

||||||

}

|

|

||||||

|

|

||||||

h1:hover > a.headerlink,

|

h1:hover > a.headerlink,

|

||||||

h2:hover > a.headerlink,

|

h2:hover > a.headerlink,

|

||||||

h3:hover > a.headerlink,

|

h3:hover > a.headerlink,

|

||||||

@ -289,12 +258,6 @@ img.align-center, .figure.align-center, object.align-center {

|

|||||||

margin-right: auto;

|

margin-right: auto;

|

||||||

}

|

}

|

||||||

|

|

||||||

img.align-default, .figure.align-default {

|

|

||||||

display: block;

|

|

||||||

margin-left: auto;

|

|

||||||

margin-right: auto;

|

|

||||||

}

|

|

||||||

|

|

||||||

.align-left {

|

.align-left {

|

||||||

text-align: left;

|

text-align: left;

|

||||||

}

|

}

|

||||||

@ -303,10 +266,6 @@ img.align-default, .figure.align-default {

|

|||||||

text-align: center;

|

text-align: center;

|

||||||

}

|

}

|

||||||

|

|

||||||

.align-default {

|

|

||||||

text-align: center;

|

|

||||||

}

|

|

||||||

|

|

||||||

.align-right {

|

.align-right {

|

||||||

text-align: right;

|

text-align: right;

|

||||||

}

|

}

|

||||||

@ -373,16 +332,6 @@ table.docutils {

|

|||||||

border-collapse: collapse;

|

border-collapse: collapse;

|

||||||

}

|

}

|

||||||

|

|

||||||

table.align-center {

|

|

||||||

margin-left: auto;

|

|

||||||

margin-right: auto;

|

|

||||||

}

|

|

||||||

|

|

||||||

table.align-default {

|

|

||||||

margin-left: auto;

|

|

||||||

margin-right: auto;

|

|

||||||

}

|

|

||||||

|

|

||||||

table caption span.caption-number {

|

table caption span.caption-number {

|

||||||

font-style: italic;

|

font-style: italic;

|

||||||

}

|

}

|

||||||

@ -416,16 +365,6 @@ table.citation td {

|

|||||||

border-bottom: none;

|

border-bottom: none;

|

||||||

}

|

}

|

||||||

|

|

||||||

th > p:first-child,

|

|

||||||

td > p:first-child {

|

|

||||||

margin-top: 0px;

|

|

||||||

}

|

|

||||||

|

|

||||||

th > p:last-child,

|

|

||||||

td > p:last-child {

|

|

||||||

margin-bottom: 0px;

|

|

||||||

}

|

|

||||||

|

|

||||||

/* -- figures --------------------------------------------------------------- */

|

/* -- figures --------------------------------------------------------------- */

|

||||||

|

|

||||||

div.figure {

|

div.figure {

|

||||||

@ -466,13 +405,6 @@ table.field-list td, table.field-list th {

|

|||||||

hyphens: manual;

|

hyphens: manual;

|

||||||

}

|

}

|

||||||

|

|

||||||

/* -- hlist styles ---------------------------------------------------------- */

|

|

||||||

|

|

||||||

table.hlist td {

|

|

||||||

vertical-align: top;

|

|

||||||

}

|

|

||||||

|

|

||||||

|

|

||||||

/* -- other body styles ----------------------------------------------------- */

|

/* -- other body styles ----------------------------------------------------- */

|

||||||

|

|

||||||

ol.arabic {

|

ol.arabic {

|

||||||

@ -495,57 +427,11 @@ ol.upperroman {

|

|||||||

list-style: upper-roman;

|

list-style: upper-roman;

|

||||||

}

|

}

|

||||||

|

|

||||||

li > p:first-child {

|

|

||||||

margin-top: 0px;

|

|

||||||

}

|

|

||||||

|

|

||||||

li > p:last-child {

|

|

||||||

margin-bottom: 0px;

|

|

||||||

}

|

|

||||||

|

|

||||||

dl.footnote > dt,

|

|

||||||

dl.citation > dt {

|

|

||||||

float: left;

|

|

||||||

}

|

|

||||||

|

|

||||||

dl.footnote > dd,

|

|

||||||

dl.citation > dd {

|

|

||||||

margin-bottom: 0em;

|

|

||||||

}

|

|

||||||

|

|

||||||

dl.footnote > dd:after,

|

|

||||||

dl.citation > dd:after {

|

|

||||||

content: "";

|

|

||||||

clear: both;

|

|

||||||

}

|

|

||||||

|

|

||||||

dl.field-list {

|

|

||||||

display: flex;

|

|

||||||

flex-wrap: wrap;

|

|

||||||

}

|

|

||||||

|

|

||||||

dl.field-list > dt {

|

|

||||||

flex-basis: 20%;

|

|

||||||

font-weight: bold;

|

|

||||||

word-break: break-word;

|

|

||||||

}

|

|

||||||

|

|

||||||

dl.field-list > dt:after {

|

|

||||||

content: ":";

|

|

||||||

}

|

|

||||||

|

|

||||||

dl.field-list > dd {

|

|

||||||

flex-basis: 70%;

|

|

||||||

padding-left: 1em;

|

|

||||||

margin-left: 0em;

|

|

||||||

margin-bottom: 0em;

|

|

||||||

}

|

|

||||||

|

|

||||||

dl {

|

dl {

|

||||||

margin-bottom: 15px;

|

margin-bottom: 15px;

|

||||||

}

|

}

|

||||||

|

|

||||||

dd > p:first-child {

|

dd p {

|

||||||

margin-top: 0px;

|

margin-top: 0px;

|

||||||

}

|

}

|

||||||

|

|

||||||

@ -559,14 +445,10 @@ dd {

|

|||||||

margin-left: 30px;

|

margin-left: 30px;

|

||||||

}

|

}

|

||||||

|

|

||||||

dt:target, span.highlighted {

|

dt:target, .highlighted {

|

||||||

background-color: #fbe54e;

|

background-color: #fbe54e;

|

||||||

}

|

}

|

||||||

|

|

||||||

rect.highlighted {

|

|

||||||

fill: #fbe54e;

|

|

||||||

}

|

|

||||||

|

|

||||||

dl.glossary dt {

|

dl.glossary dt {

|

||||||

font-weight: bold;

|

font-weight: bold;

|

||||||

font-size: 1.1em;

|

font-size: 1.1em;

|

||||||

@ -618,12 +500,6 @@ dl.glossary dt {

|

|||||||

font-style: oblique;

|

font-style: oblique;

|

||||||

}

|

}

|

||||||

|

|

||||||

.classifier:before {

|

|

||||||

font-style: normal;

|

|

||||||

margin: 0.5em;

|

|

||||||

content: ":";

|

|

||||||

}

|

|

||||||

|

|

||||||

abbr, acronym {

|

abbr, acronym {

|

||||||

border-bottom: dotted 1px;

|

border-bottom: dotted 1px;

|

||||||

cursor: help;

|

cursor: help;

|

||||||

|

|||||||

BIN

doc/html/_static/comment-bright.png

Normal file

|

After Width: | Height: | Size: 756 B |

BIN

doc/html/_static/comment-close.png

Normal file

|

After Width: | Height: | Size: 829 B |

BIN

doc/html/_static/comment.png

Normal file

|

After Width: | Height: | Size: 641 B |

@ -4,7 +4,7 @@

|

|||||||

*

|

*

|

||||||

* Sphinx JavaScript utilities for all documentation.

|

* Sphinx JavaScript utilities for all documentation.

|

||||||

*

|

*

|

||||||

* :copyright: Copyright 2007-2019 by the Sphinx team, see AUTHORS.

|

* :copyright: Copyright 2007-2017 by the Sphinx team, see AUTHORS.

|

||||||

* :license: BSD, see LICENSE for details.

|

* :license: BSD, see LICENSE for details.

|

||||||

*

|

*

|

||||||

*/

|

*/

|

||||||

@ -45,7 +45,7 @@ jQuery.urlencode = encodeURIComponent;

|

|||||||

* it will always return arrays of strings for the value parts.

|

* it will always return arrays of strings for the value parts.

|

||||||

*/

|

*/

|

||||||

jQuery.getQueryParameters = function(s) {

|

jQuery.getQueryParameters = function(s) {

|

||||||

if (typeof s === 'undefined')

|

if (typeof s == 'undefined')

|

||||||

s = document.location.search;

|

s = document.location.search;

|

||||||

var parts = s.substr(s.indexOf('?') + 1).split('&');

|

var parts = s.substr(s.indexOf('?') + 1).split('&');

|

||||||

var result = {};

|

var result = {};

|

||||||

@ -66,54 +66,29 @@ jQuery.getQueryParameters = function(s) {

|

|||||||

* span elements with the given class name.

|

* span elements with the given class name.

|

||||||

*/

|

*/

|

||||||

jQuery.fn.highlightText = function(text, className) {

|

jQuery.fn.highlightText = function(text, className) {

|

||||||

function highlight(node, addItems) {

|

function highlight(node) {

|

||||||

if (node.nodeType === 3) {

|

if (node.nodeType == 3) {

|

||||||

var val = node.nodeValue;

|

var val = node.nodeValue;

|

||||||

var pos = val.toLowerCase().indexOf(text);

|

var pos = val.toLowerCase().indexOf(text);

|

||||||

if (pos >= 0 &&

|

if (pos >= 0 && !jQuery(node.parentNode).hasClass(className)) {

|

||||||

!jQuery(node.parentNode).hasClass(className) &&

|

var span = document.createElement("span");

|

||||||

!jQuery(node.parentNode).hasClass("nohighlight")) {

|

span.className = className;

|

||||||

var span;

|

|

||||||

var isInSVG = jQuery(node).closest("body, svg, foreignObject").is("svg");

|

|

||||||

if (isInSVG) {

|

|

||||||

span = document.createElementNS("http://www.w3.org/2000/svg", "tspan");

|

|

||||||

} else {

|

|

||||||

span = document.createElement("span");

|

|

||||||

span.className = className;

|

|

||||||

}

|

|

||||||

span.appendChild(document.createTextNode(val.substr(pos, text.length)));

|

span.appendChild(document.createTextNode(val.substr(pos, text.length)));

|

||||||

node.parentNode.insertBefore(span, node.parentNode.insertBefore(

|

node.parentNode.insertBefore(span, node.parentNode.insertBefore(

|

||||||

document.createTextNode(val.substr(pos + text.length)),

|

document.createTextNode(val.substr(pos + text.length)),

|

||||||

node.nextSibling));

|

node.nextSibling));

|

||||||

node.nodeValue = val.substr(0, pos);

|

node.nodeValue = val.substr(0, pos);

|

||||||

if (isInSVG) {

|

|

||||||

var rect = document.createElementNS("http://www.w3.org/2000/svg", "rect");

|

|

||||||

var bbox = node.parentElement.getBBox();

|

|

||||||

rect.x.baseVal.value = bbox.x;

|

|

||||||

rect.y.baseVal.value = bbox.y;

|

|

||||||

rect.width.baseVal.value = bbox.width;

|

|

||||||

rect.height.baseVal.value = bbox.height;

|

|

||||||

rect.setAttribute('class', className);

|

|

||||||

addItems.push({

|

|

||||||

"parent": node.parentNode,

|

|

||||||

"target": rect});

|

|

||||||

}

|

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

else if (!jQuery(node).is("button, select, textarea")) {

|

else if (!jQuery(node).is("button, select, textarea")) {

|

||||||

jQuery.each(node.childNodes, function() {

|

jQuery.each(node.childNodes, function() {

|

||||||

highlight(this, addItems);

|

highlight(this);

|

||||||

});

|

});

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

var addItems = [];

|

return this.each(function() {

|

||||||

var result = this.each(function() {

|

highlight(this);

|

||||||

highlight(this, addItems);

|

|

||||||

});

|

});

|

||||||

for (var i = 0; i < addItems.length; ++i) {

|

|

||||||

jQuery(addItems[i].parent).before(addItems[i].target);

|

|

||||||

}

|

|

||||||

return result;

|

|

||||||

};

|

};

|

||||||

|

|

||||||

/*

|

/*

|

||||||

@ -149,30 +124,28 @@ var Documentation = {

|

|||||||

this.fixFirefoxAnchorBug();

|

this.fixFirefoxAnchorBug();

|

||||||

this.highlightSearchWords();

|

this.highlightSearchWords();

|

||||||

this.initIndexTable();

|

this.initIndexTable();

|

||||||

if (DOCUMENTATION_OPTIONS.NAVIGATION_WITH_KEYS) {

|

|

||||||

this.initOnKeyListeners();

|

|

||||||

}

|

|

||||||

},

|

},

|

||||||

|

|

||||||

/**

|

/**

|

||||||

* i18n support

|

* i18n support

|

||||||

*/

|

*/

|

||||||

TRANSLATIONS : {},

|

TRANSLATIONS : {},

|

||||||

PLURAL_EXPR : function(n) { return n === 1 ? 0 : 1; },

|

PLURAL_EXPR : function(n) { return n == 1 ? 0 : 1; },

|

||||||

LOCALE : 'unknown',

|

LOCALE : 'unknown',

|

||||||

|

|

||||||

// gettext and ngettext don't access this so that the functions

|

// gettext and ngettext don't access this so that the functions

|

||||||

// can safely be bound to a different name (_ = Documentation.gettext)

|

// can safely be bound to a different name (_ = Documentation.gettext)

|

||||||

gettext : function(string) {

|

gettext : function(string) {

|

||||||

var translated = Documentation.TRANSLATIONS[string];

|

var translated = Documentation.TRANSLATIONS[string];

|

||||||

if (typeof translated === 'undefined')

|

if (typeof translated == 'undefined')

|

||||||

return string;

|

return string;

|

||||||

return (typeof translated === 'string') ? translated : translated[0];

|

return (typeof translated == 'string') ? translated : translated[0];

|

||||||

},

|

},

|

||||||

|

|

||||||

ngettext : function(singular, plural, n) {

|

ngettext : function(singular, plural, n) {

|

||||||

var translated = Documentation.TRANSLATIONS[singular];

|

var translated = Documentation.TRANSLATIONS[singular];

|

||||||

if (typeof translated === 'undefined')

|

if (typeof translated == 'undefined')

|

||||||

return (n == 1) ? singular : plural;

|

return (n == 1) ? singular : plural;

|

||||||

return translated[Documentation.PLURAL_EXPR(n)];

|

return translated[Documentation.PLURAL_EXPR(n)];

|

||||||

},

|

},

|

||||||

@ -207,7 +180,7 @@ var Documentation = {

|

|||||||

* see: https://bugzilla.mozilla.org/show_bug.cgi?id=645075

|

* see: https://bugzilla.mozilla.org/show_bug.cgi?id=645075

|

||||||

*/

|

*/

|

||||||

fixFirefoxAnchorBug : function() {

|

fixFirefoxAnchorBug : function() {

|

||||||

if (document.location.hash && $.browser.mozilla)

|

if (document.location.hash)

|

||||||

window.setTimeout(function() {

|

window.setTimeout(function() {

|

||||||

document.location.href += '';

|

document.location.href += '';

|

||||||

}, 10);

|

}, 10);

|

||||||

@ -243,7 +216,7 @@ var Documentation = {

|

|||||||

var src = $(this).attr('src');

|

var src = $(this).attr('src');

|

||||||

var idnum = $(this).attr('id').substr(7);

|

var idnum = $(this).attr('id').substr(7);

|

||||||

$('tr.cg-' + idnum).toggle();

|

$('tr.cg-' + idnum).toggle();

|

||||||

if (src.substr(-9) === 'minus.png')

|

if (src.substr(-9) == 'minus.png')

|

||||||

$(this).attr('src', src.substr(0, src.length-9) + 'plus.png');

|

$(this).attr('src', src.substr(0, src.length-9) + 'plus.png');

|

||||||

else

|

else

|

||||||

$(this).attr('src', src.substr(0, src.length-8) + 'minus.png');

|

$(this).attr('src', src.substr(0, src.length-8) + 'minus.png');

|

||||||

@ -275,7 +248,7 @@ var Documentation = {

|

|||||||

var path = document.location.pathname;

|

var path = document.location.pathname;

|

||||||

var parts = path.split(/\//);

|

var parts = path.split(/\//);

|

||||||

$.each(DOCUMENTATION_OPTIONS.URL_ROOT.split(/\//), function() {

|

$.each(DOCUMENTATION_OPTIONS.URL_ROOT.split(/\//), function() {

|

||||||

if (this === '..')

|

if (this == '..')

|

||||||

parts.pop();

|

parts.pop();

|

||||||

});

|

});

|

||||||

var url = parts.join('/');

|

var url = parts.join('/');

|

||||||

|

|||||||

@ -1,10 +0,0 @@

|

|||||||

var DOCUMENTATION_OPTIONS = {

|

|

||||||

URL_ROOT: document.getElementById("documentation_options").getAttribute('data-url_root'),

|

|

||||||

VERSION: '',

|

|

||||||

LANGUAGE: 'None',

|

|

||||||

COLLAPSE_INDEX: false,

|

|

||||||

FILE_SUFFIX: '.html',

|

|

||||||

HAS_SOURCE: true,

|

|

||||||

SOURCELINK_SUFFIX: '.txt',

|

|

||||||

NAVIGATION_WITH_KEYS: false

|

|

||||||

};

|

|

||||||

BIN

doc/html/_static/down-pressed.png

Normal file

|

After Width: | Height: | Size: 222 B |

BIN

doc/html/_static/down.png

Normal file

|

After Width: | Height: | Size: 202 B |

8

doc/html/_static/jquery.js

vendored

@ -1,297 +0,0 @@

|

|||||||

/*

|

|

||||||

* language_data.js

|

|

||||||

* ~~~~~~~~~~~~~~~~

|

|

||||||

*

|

|

||||||

* This script contains the language-specific data used by searchtools.js,

|

|

||||||

* namely the list of stopwords, stemmer, scorer and splitter.

|

|

||||||

*

|

|

||||||

* :copyright: Copyright 2007-2019 by the Sphinx team, see AUTHORS.

|

|

||||||

* :license: BSD, see LICENSE for details.

|

|

||||||

*

|

|

||||||

*/

|

|

||||||

|

|

||||||

var stopwords = ["a","and","are","as","at","be","but","by","for","if","in","into","is","it","near","no","not","of","on","or","such","that","the","their","then","there","these","they","this","to","was","will","with"];

|

|

||||||

|

|

||||||

|

|

||||||

/* Non-minified version JS is _stemmer.js if file is provided */

|

|

||||||

/**

|

|

||||||

* Porter Stemmer

|

|

||||||

*/

|

|

||||||

var Stemmer = function() {

|

|

||||||

|

|

||||||

var step2list = {

|

|

||||||

ational: 'ate',

|

|

||||||

tional: 'tion',

|

|

||||||

enci: 'ence',

|

|

||||||

anci: 'ance',

|

|

||||||

izer: 'ize',

|

|

||||||

bli: 'ble',

|

|

||||||

alli: 'al',

|

|

||||||

entli: 'ent',

|

|

||||||

eli: 'e',

|

|

||||||