diff --git a/examples/README.md b/examples/README.md
index 92feaec..5d919f7 100644
--- a/examples/README.md
+++ b/examples/README.md
@@ -123,3 +123,59 @@ out.run()
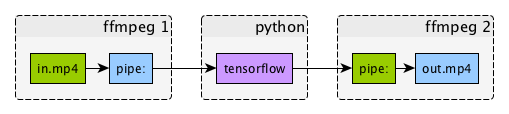

+## [Tensorflow Streaming](https://github.com/kkroening/ffmpeg-python/blob/master/examples/tensorflow_stream.py)
+
+- Decode input video with ffmpeg
+- Process video with TensorFlow using the "deep dream" example
+- Encode output video with ffmpeg
+
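+```python
+import subprocess
+
+import ffmpeg
+import numpy as np
+
+# in_filename, out_filename, width and height are assumed to be defined
+# elsewhere (e.g. width/height read from ffmpeg.probe(in_filename)).
+
+# Decode the first 8 frames of the input to raw RGB bytes on stdout.
+args1 = (
+    ffmpeg
+    .input(in_filename)
+    .output('pipe:', format='rawvideo', pix_fmt='rgb24', vframes=8)
+    .compile()
+)
+process1 = subprocess.Popen(args1, stdout=subprocess.PIPE)
+
+# Encode raw RGB frames read from stdin into the output file.
+args2 = (
+    ffmpeg
+    .input('pipe:', format='rawvideo', pix_fmt='rgb24', s='{}x{}'.format(width, height))
+    .output(out_filename, pix_fmt='yuv420p')
+    .overwrite_output()
+    .compile()
+)
+process2 = subprocess.Popen(args2, stdin=subprocess.PIPE)
+
+while True:
+    # One rgb24 frame is width * height * 3 bytes.
+    in_bytes = process1.stdout.read(width * height * 3)
+    if not in_bytes:
+        break  # the decoder has reached the end of the stream
+    in_frame = (
+        np
+        .frombuffer(in_bytes, np.uint8)
+        .reshape([height, width, 3])
+    )
+
+    # See examples/tensorflow_stream.py:
+    out_frame = deep_dream.process_frame(in_frame)
+
+    process2.stdin.write(
+        out_frame
+        .astype(np.uint8)
+        .tobytes()
+    )
+
+# Signal end of input to the encoder, then wait for both processes.
+process2.stdin.close()
+process1.wait()
+process2.wait()
+```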
+
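Streaming raw frames between two processes comes down to reading fixed-size frames (`width * height * 3` bytes for `rgb24`) until EOF, then closing the encoder's stdin so it can finish the output. Below is a minimal stdlib-only sketch of that plumbing. It assumes two small Python child processes as stand-ins for the ffmpeg decoder and encoder, and `process_frame` is a placeholder, not the deep-dream step. The names `iter_frames`, `run_pipeline`, `DECODER` and `ENCODER` are hypothetical.

```python
import io
import subprocess
import sys

# Hypothetical stand-ins for the two ffmpeg processes (not ffmpeg itself):
# DECODER writes NUM_FRAMES raw rgb24 frames to stdout, ENCODER reads stdin
# to EOF and prints how many bytes it received.
WIDTH, HEIGHT = 4, 2
FRAME_SIZE = WIDTH * HEIGHT * 3  # rgb24 uses 3 bytes per pixel
NUM_FRAMES = 5

DECODER = (
    "import sys\n"
    "for i in range({n}):\n"
    "    sys.stdout.buffer.write(bytes([i * 10]) * {size})\n"
).format(n=NUM_FRAMES, size=FRAME_SIZE)
ENCODER = "import sys; print(len(sys.stdin.buffer.read()))"


def iter_frames(stream, frame_size):
    """Yield complete frames from a binary stream, stopping at EOF."""
    while True:
        chunk = stream.read(frame_size)
        if len(chunk) < frame_size:
            # Empty read means EOF; a short read is a truncated last frame, dropped.
            return
        yield chunk


def process_frame(frame):
    """Placeholder for the TensorFlow step: halve every channel value."""
    return bytes(b // 2 for b in frame)


def run_pipeline():
    """Decode, process, encode; return (frames sent, bytes the encoder received)."""
    decoder = subprocess.Popen([sys.executable, "-c", DECODER], stdout=subprocess.PIPE)
    encoder = subprocess.Popen(
        [sys.executable, "-c", ENCODER],
        stdin=subprocess.PIPE,
        stdout=subprocess.PIPE,
    )
    frames_sent = 0
    for frame in iter_frames(decoder.stdout, FRAME_SIZE):
        encoder.stdin.write(process_frame(frame))
        frames_sent += 1
    decoder.stdout.close()
    decoder.wait()
    encoder.stdin.close()  # EOF tells the encoder its input is complete
    received = int(encoder.stdout.read().decode().strip())
    encoder.stdout.close()
    encoder.wait()
    return frames_sent, received


if __name__ == "__main__":
    # 7 bytes hold two complete 3-byte frames plus one stray byte.
    print(list(iter_frames(io.BytesIO(b"abcdefg"), 3)))  # [b'abc', b'def']
    print(run_pipeline())  # (5, 120)
```

In the real pipeline, the stand-in commands would be replaced by the `args1` and `args2` lists built with ffmpeg-python, and `process_frame` by the TensorFlow step.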
+## [FaceTime webcam input](https://github.com/kkroening/ffmpeg-python/blob/master/examples/facetime.py)
+
+```python
+import ffmpeg
+
+# Record 100 frames from the "FaceTime" camera (avfoundation is macOS-only).
+(
+    ffmpeg
+    .input('FaceTime', format='avfoundation', pix_fmt='uyvy422', framerate=30)
+    .output('out.mp4', pix_fmt='yuv420p', vframes=100)
+    .run()
+)
+```
+
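The FaceTime example requests `uyvy422` frames at 30 fps and stops after `vframes=100`, so it records roughly 3.3 seconds of video and converts it to `yuv420p` for the MP4 output. A small sketch of the arithmetic involved, assuming a 1280x720 capture (the example does not set a resolution) and the standard average bytes-per-pixel figure for each format; `frame_size` and `capture_seconds` are hypothetical helpers, not part of ffmpeg-python.

```python
from fractions import Fraction

# Average bytes per pixel for the raw formats used in these examples:
# rgb24 packs 3 bytes per pixel, uyvy422 (4:2:2) averages 2,
# yuv420p (4:2:0) averages 1.5.
BYTES_PER_PIXEL = {
    "rgb24": Fraction(3),
    "uyvy422": Fraction(2),
    "yuv420p": Fraction(3, 2),
}


def frame_size(width, height, pix_fmt):
    """Size in bytes of one raw frame (assumes even width and height)."""
    return int(width * height * BYTES_PER_PIXEL[pix_fmt])


def capture_seconds(vframes, framerate):
    """Duration of a capture limited to `vframes` frames at `framerate` fps."""
    return vframes / framerate


if __name__ == "__main__":
    print(frame_size(1280, 720, "uyvy422"))  # 1843200
    print(frame_size(1280, 720, "yuv420p"))  # 1382400
    print(round(capture_seconds(100, 30), 2))  # 3.33
```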
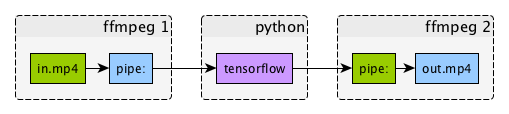